Designing AI/ML/GenAI Systems: Navigating the Nuances of a New Paradigm

Artificial Intelligence, Machine Learning, and Generative AI are transforming industries and creating unprecedented opportunities. However, building successful AI/ML/GenAI systems requires a different mindset and a more cautious approach than traditional software development. The stakes are higher, the complexities deeper, and the potential pitfalls more numerous.

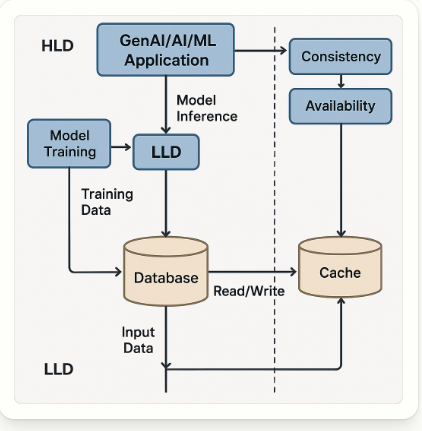

This blog will explore the extra precautions and considerations that must be incorporated into the entire system design lifecycle of AI/ML/GenAI projects, from High-Level Design (HLD) and Low-Level Design (LLD) to requirements gathering and testing. We’ll also examine the novel non-functional requirements that demand attention and the specific adaptations required in system design elements like databases, memory management, consistency, availability, and caching.

Extra Precautions in Requirements Design for AI/ML/GenAI

Traditional requirements gathering often focuses on explicit user needs and functional specifications. AI projects demand a broader perspective:

Requirements Design: Go Beyond Functional Needs

AI/ML systems don’t just need “what to do”; they need “how well to do it,” under what data assumptions, bias boundaries, and ethical constraints.

Additional Requirement Considerations:

- Data Requirements: Define source, volume, quality, labelling needs, and retraining frequency.

- Model Performance: Accuracy, latency, confidence thresholds, fairness metrics, drift tolerance.

- Explainability & Auditability: Should the model’s decision be explainable? For whom?

- Bias & Fairness: Define what constitutes bias in the context of the application.

- Human-in-the-loop (HITL): When is human review required? How is feedback looped into retraining?

- Compliance & Privacy: Ensure data handling and inference comply with the regulations that apply to you, such as GDPR, HIPAA, and the EU AI Act.

High-Level Design (HLD): Embrace Modular AI Thinking

HLD must account for both software architecture and model architecture. Think in terms of model pipelines, monitoring, and decoupling model lifecycle from product lifecycle.

Extra HLD Precautions:

- Model as a Microservice: Use containerized, stateless model endpoints (e.g., FastAPI, TorchServe).

- Model Versioning & Registry: MLflow, Weights & Biases, or custom versioning for reproducibility.

- Retraining Pipelines: Design CI/CD + CT (Continuous Training) with triggers based on drift or feedback.

- Feature Store Integration: Design a central place to manage and reuse features.

- Observability: Include logs, metrics, drift detection, explainability dashboards.
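
To make the versioning and registry idea concrete, here is a minimal in-memory sketch. The names `ModelVersion`, `ModelRegistry`, `register`, `promote`, and `production` are hypothetical and are not the MLflow or Weights & Biases API; a real registry would persist this state and store the artifacts themselves.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # hypothetical location of the serialized model
    metrics: Dict[str, float] = field(default_factory=dict)


class ModelRegistry:
    """Toy registry: tracks versions per model and which one serves production."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}
        self._production: Dict[str, int] = {}

    def register(self, name: str, artifact_uri: str,
                 metrics: Dict[str, float]) -> ModelVersion:
        # Version numbers are assigned sequentially, starting at 1.
        versions = self._versions.setdefault(name, [])
        model_version = ModelVersion(name, len(versions) + 1, artifact_uri, dict(metrics))
        versions.append(model_version)
        return model_version

    def promote(self, name: str, version: int) -> None:
        # Promotion only moves a pointer, so rollback is just another promote.
        known = [v.version for v in self._versions.get(name, [])]
        if version not in known:
            raise KeyError(f"{name} has no version {version}")
        self._production[name] = version

    def production(self, name: str) -> Optional[ModelVersion]:
        version = self._production.get(name)
        if version is None:
            return None
        return self._versions[name][version - 1]
```

Because promotion is only a pointer change, the model lifecycle stays decoupled from the product lifecycle: the serving layer asks the registry which version is in production instead of hard-coding it.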

Low-Level Design (LLD): Think Data Flow + Model Flow

Extra LLD Precautions:

- Data Validation Layers: Add preprocessing, anomaly detection, and schema validation blocks.

- Model Input/Output Validation: Use type-safe inputs, handle nulls, and log edge cases.

- Failure Handling: The LLD must plan fallback strategies for when the model fails (default answer, human escalation).

- Hybrid Models or Heuristics: Mix rule-based + model-based decisions where accuracy is low.

- Token & Memory Management for GenAI: Limit input length, optimize prompts, and chunk large data.
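
The chunking idea in the last bullet can be sketched as follows. This is a simplified illustration: `chunk_by_token_budget` is a hypothetical helper, and whitespace splitting stands in for a real tokenizer, so actual token counts for your model will differ.

```python
from typing import List


def chunk_by_token_budget(text: str, max_tokens: int, overlap: int = 0) -> List[str]:
    """Split text into chunks of at most max_tokens whitespace-delimited tokens.

    Consecutive chunks share `overlap` tokens so context is not cut abruptly.
    Assumption: whitespace tokens approximate model tokens; swap in the target
    model's tokenizer for real budgets.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")

    tokens = text.split()
    step = max_tokens - overlap
    chunks: List[str] = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + max_tokens]))
        # Stop once this chunk has reached the end of the text.
        if start + max_tokens >= len(tokens):
            break
    return chunks
```

For example, `chunk_by_token_budget("a b c d e f g", max_tokens=3, overlap=1)` yields three chunks, each sharing one token with its neighbor.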

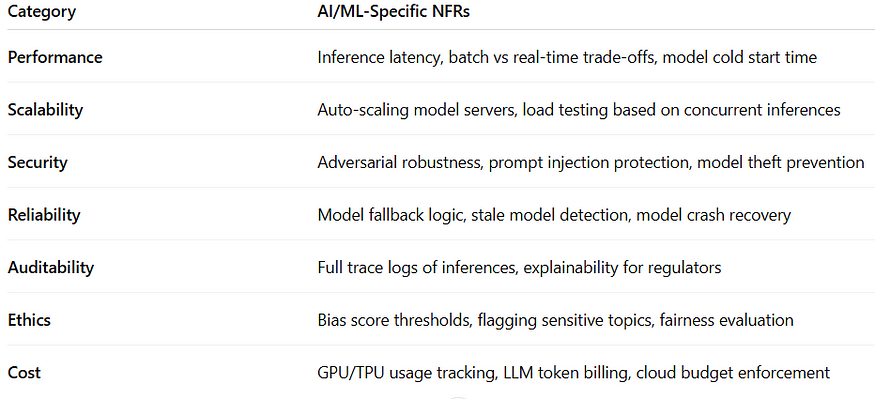

Non-Functional Requirements (NFRs): Redefine for AI/ML

AI/ML and GenAI projects bring new types of NFRs not usually found in traditional applications.

New NFRs to Add:

Data Requirements and Quality:

- Explicitly define data requirements upfront: Specify data sources, data formats, data volume, data quality metrics (accuracy, completeness, consistency), and data lineage. Don’t just assume data will be “available.”

- Assess bias and fairness: Consider potential biases in the data that could lead to discriminatory outcomes. Define strategies for mitigating these biases.

- Address data privacy and security: Carefully consider data privacy regulations (GDPR, CCPA) and security requirements. Implement appropriate data anonymization, encryption, and access control measures.

Model Performance and Interpretability:

- Define clear performance metrics: Specify acceptable levels of accuracy, precision, recall, F1-score, and other relevant metrics.

- Establish interpretability requirements: Consider how important it is to understand why the model makes certain decisions. Some applications require highly interpretable models, while others may be more tolerant of “black box” approaches.

- Address model drift: Plan for how the model’s performance will be monitored and maintained over time, as data distributions change.
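
As a small illustration of turning performance metrics into a testable requirement, the sketch below computes accuracy, precision, recall, and F1 for a binary classifier and checks them against minimum targets. The function names and the target values are assumptions for the example, not recommended thresholds.

```python
from typing import Dict, Sequence


def binary_classification_metrics(y_true: Sequence[int],
                                  y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for labels in {0, 1}."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    # Each ratio falls back to 0.0 when its denominator is zero.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def meets_targets(metrics: Dict[str, float], targets: Dict[str, float]) -> bool:
    """Return True only if every targeted metric reaches its minimum."""
    return all(metrics[name] >= minimum for name, minimum in targets.items())
```

A release gate could then call `meets_targets(metrics, {"precision": 0.7, "recall": 0.7})` and block deployment when it returns `False`.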

Ethical Considerations:

- Conduct an ethical impact assessment: Identify potential ethical risks and unintended consequences of the AI system.

- Define ethical guidelines: Establish clear guidelines for responsible AI development and deployment.

- Implement mechanisms for transparency and accountability: Ensure that the system’s decisions are explainable and that there are mechanisms for addressing errors or biases.

Scalability and Performance:

- Plan for data growth: Size the system for current data volumes and for the volumes you expect as it scales.

- Define clear performance targets: Specify acceptable response times, throughput, and resource utilization.

- Consider scalability requirements: Plan for how the system will handle increasing data volumes and user traffic.

New Non-Functional Requirements (NFRs) for AI/ML/GenAI

Traditional NFRs (security, performance, usability) are still relevant, but AI systems introduce new NFRs that require careful consideration:

Explainability/Interpretability:

- Definition: The degree to which a human can understand the reasoning behind an AI system’s decisions.

- Importance: Crucial for building trust, ensuring accountability, and complying with regulations in high-stakes applications (e.g., healthcare, finance).

- Measurement: Can be measured using techniques like feature importance analysis, rule extraction, and counterfactual explanations.

Fairness/Bias Mitigation:

- Definition: The extent to which the AI system avoids discriminatory outcomes for different demographic groups.

- Importance: Essential for ethical AI development and preventing unintended harm.

- Measurement: Can be measured using metrics like disparate impact, equal opportunity, and predictive parity.
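
Of the metrics just listed, disparate impact is the simplest to sketch: it compares the rate of positive predictions for each group against a reference group. The helper names and the group labels below are hypothetical. A ratio below roughly 0.8 is often cited as a warning sign (the "four-fifths" rule of thumb), but the right threshold depends on your application.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(groups: Sequence[Hashable],
                    predictions: Sequence[int]) -> Dict[Hashable, float]:
    """Fraction of positive (1) predictions per group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(groups: Sequence[Hashable],
                           predictions: Sequence[int],
                           reference_group: Hashable) -> Dict[Hashable, float]:
    """Selection rate of each group divided by the reference group's rate."""
    rates = selection_rates(groups, predictions)
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no positive predictions")
    return {group: rate / reference_rate
            for group, rate in rates.items() if group != reference_group}
```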

Robustness:

- Definition: The ability of the AI system to maintain its performance in the face of noisy, incomplete, or adversarial data.

- Importance: Crucial for real-world deployments where data quality is often imperfect.

- Measurement: Can be measured using techniques like adversarial testing and sensitivity analysis.
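
One simple form of sensitivity analysis is to add small random noise to inputs and count how often the prediction changes. The sketch below assumes a `predict` callable that maps a list of floats to a label; `prediction_flip_rate` is a hypothetical helper, and Gaussian noise is only one of many possible perturbations.

```python
import random
from typing import Callable, Hashable, List, Sequence


def prediction_flip_rate(predict: Callable[[List[float]], Hashable],
                         inputs: Sequence[Sequence[float]],
                         noise_std: float,
                         trials: int = 20,
                         seed: int = 0) -> float:
    """Share of noisy copies whose prediction differs from the clean one."""
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    flips = 0
    total = 0
    for x in inputs:
        baseline = predict(list(x))
        for _ in range(trials):
            noisy = [value + rng.gauss(0.0, noise_std) for value in x]
            flips += int(predict(noisy) != baseline)
            total += 1
    return flips / total if total else 0.0
```

A flip rate near zero at realistic noise levels suggests the model is stable around those inputs; a high rate flags inputs that sit close to a decision boundary.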

Data Security and Privacy:

- Design consideration: Treat privacy and data protection as explicit design inputs rather than afterthoughts.

- Definition: Protecting sensitive data from unauthorized access, use, or disclosure, while adhering to privacy regulations.

- Importance: Essential for maintaining user trust and complying with legal requirements.

- Measurement: Can be measured using metrics like data breach frequency, compliance audits, and user consent rates.

Model Governance:

Governance needs to be part of the design from the start, not something added after deployment.

- Definition: The processes and policies for managing the entire lifecycle of AI models, from development to deployment and maintenance.

- Importance: Ensures responsible and ethical AI development and deployment.

- Measurement: Can be measured by tracking model lineage, monitoring model performance, and conducting regular audits.

Real-Time Adaptability:

- Definition: The ability of the system to adapt its behavior to the current situation as inputs and context change.

- Example: AI assistants and real-time recommendation engines.

New Testing Considerations for AI/ML/GenAI

Traditional software testing techniques are not sufficient for AI systems. New testing methods are needed to address the unique characteristics of AI/ML/GenAI:

Data Testing:

- Data quality testing: Verify the accuracy, completeness, consistency, and validity of the training and test data.

- Bias testing: Assess the model’s performance across different demographic groups to identify and mitigate bias.

- Adversarial testing: Evaluate the model’s robustness to adversarial attacks and noisy data.
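
A data quality test can start as small as the sketch below, which checks rows against an expected schema and reports per-column completeness. The function name and the example columns are assumptions for illustration; dedicated validation libraries offer far more, but the principle is the same.

```python
from typing import Any, Dict, List, Tuple


def validate_rows(rows: List[Dict[str, Any]],
                  schema: Dict[str, type]) -> Dict[str, Any]:
    """Check each row against the schema and measure column completeness."""
    errors: List[Tuple[int, str, str]] = []
    missing = {column: 0 for column in schema}

    for index, row in enumerate(rows):
        for column, expected_type in schema.items():
            value = row.get(column)
            if value is None:
                # Absent keys and explicit None both count as missing.
                missing[column] += 1
                errors.append((index, column, "missing"))
            elif not isinstance(value, expected_type):
                errors.append((index, column,
                               f"expected {expected_type.__name__}, "
                               f"got {type(value).__name__}"))

    count = len(rows)
    completeness = {column: (1 - missing[column] / count) if count else 0.0
                    for column in schema}
    return {"errors": errors, "completeness": completeness}
```

Running this on every training and inference batch, and failing the pipeline when completeness drops below an agreed level, turns "data quality" from an assumption into a checked requirement.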

Model Testing:

- Performance testing: Measure the model’s accuracy, precision, recall, and F1-score on various datasets.

- Explainability testing: Assess the model’s interpretability and identify the factors that influence its decisions.

- Security testing: Evaluate the model’s vulnerability to attacks, such as model inversion and adversarial examples.

System Testing:

- Integration testing: Verify that the AI components integrate seamlessly with other parts of the system.

- Usability testing: Assess the user experience and identify areas for improvement.

- Performance testing: Measure the system’s overall performance, including response times, throughput, and scalability.

- A/B testing: Run model versions side by side on comparable traffic to measure the difference in performance between them.
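
When comparing two model versions in an A/B test, it helps to check whether the observed difference could be noise. The sketch below applies a two-proportion z-test to success counts (for example, accepted recommendations) from each variant. This is one common choice rather than the only valid test, and the function name is hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in success rates B - A."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both variants need at least one observation")

    rate_a = success_a / n_a
    rate_b = success_b / n_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("no variation in outcomes; test is undefined")

    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A small p-value suggests the gap between versions is unlikely to be chance alone; whether the gap matters for the business is a separate question.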

Testing Strategy: Not Just Code, Test the Model Too

Testing in AI/ML systems must combine traditional QA with ML-specific testing.

Expanded Testing Areas:

- Unit Tests for Pipelines: Test data transformation, not just final output.

- Model Accuracy Tests: Evaluate precision, recall, F1, and business impact metrics.

- Bias & Fairness Tests: Stratified testing by demographic segments.

- Prompt Testing (GenAI): Prompt templates, few-shot consistency, hallucination rate.

- Regression Testing: Ensure model performance doesn’t degrade with new versions.

- Drift Detection: Set up real-time or batch tests for distributional drift in data.

- Security Tests: Adversarial testing, injection attacks, model jailbreaks.
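
For the drift detection item, one widely used batch check is the Population Stability Index (PSI), which compares the binned distribution of a feature at training time with its distribution in production. The sketch below is a minimal version under simple assumptions: equal-width bins derived from the reference data, and a hypothetical function name. Values around 0.1 and 0.25 are often quoted as "watch" and "act" levels, but treat those as rules of thumb.

```python
import math
from typing import List, Sequence


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               bins: int = 10,
                               eps: float = 1e-6) -> float:
    """PSI between a reference sample (expected) and a new sample (actual)."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    low, high = min(expected), max(expected)
    if high == low:
        raise ValueError("reference sample has no spread")
    width = (high - low) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Values outside the reference range are clamped to the edge bins.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(count / len(values), eps) for count in counts]

    reference = proportions(expected)
    current = proportions(actual)
    return sum((c - r) * math.log(c / r) for r, c in zip(reference, current))
```

A scheduled job can compute this per feature and trigger an alert or a retraining pipeline when the score crosses your chosen threshold.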

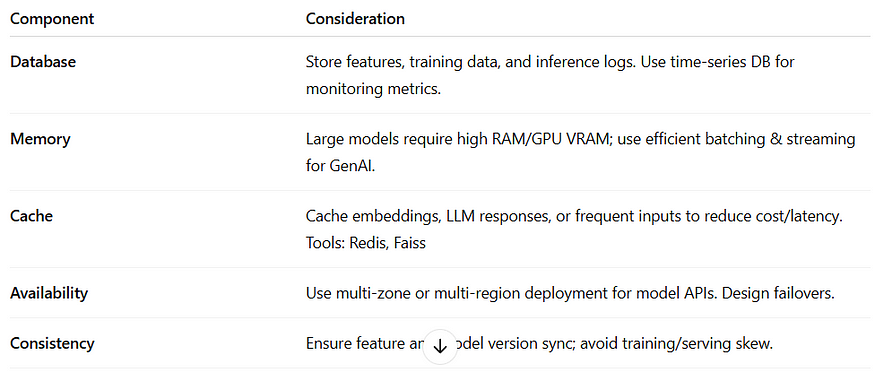

Precautions in System Design: Database, Memory, Consistency, Availability, Cache

Designing the underlying infrastructure for AI/ML/GenAI systems requires careful attention to various factors:

Database:

- Data storage requirements: Choose a database that can handle the volume, velocity, and variety of data generated by AI systems. Consider options like NoSQL databases, graph databases, and specialized AI data stores such as vector databases.

- Data indexing and querying: Implement efficient indexing and querying strategies to enable fast retrieval of relevant data.

- Data versioning and lineage: Implement mechanisms for tracking data versions and lineage to ensure reproducibility and accountability.

- Data integrity and validation: Enforce data integrity constraints and implement data validation procedures to prevent errors.

Memory:

- Memory management for model training: Allocate sufficient memory for training large AI models. Consider techniques like gradient accumulation (smaller micro-batches per step) and model parallelism (splitting the model across devices) to reduce the per-device memory footprint.

- Memory optimization for inference: Optimize memory usage during inference to minimize latency and improve throughput. Consider techniques like model quantization and pruning.

- Memory leak detection: Implement tools and processes for detecting and preventing memory leaks.

Consistency:

- Data consistency: Ensure data consistency across different components of the AI system, especially when dealing with distributed data stores.

- Model consistency: Ensure that all instances of a deployed model are consistent with the latest version.

- Eventual consistency: Accept and adopt an eventual consistency pattern when real-time performance or massive scale is a hard requirement.

Availability:

- High availability architecture: Design the system to be highly available, with redundancy and failover mechanisms in place.

- Disaster recovery: Develop a disaster recovery plan to ensure business continuity in the event of a system failure.

- Monitoring and alerting: Implement comprehensive monitoring and alerting to detect and respond to issues proactively.

Caching:

- Caching strategies: Use caching to improve the performance of frequently accessed data and model predictions. Consider different caching strategies, such as in-memory caching, content delivery networks (CDNs), and database caching.

- Cache invalidation: Implement effective cache invalidation policies to ensure that the cache remains consistent with the underlying data.
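
For model predictions, a practical invalidation policy is to key the cache on the model version and expire entries after a time-to-live (TTL). The sketch below is an in-memory illustration; `PredictionCache` and its methods are hypothetical names, and a production system would more likely use a shared cache service.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class PredictionCache:
    """In-memory cache keyed by (model_version, features) with a TTL."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._store: Dict[Tuple[str, Hashable], Tuple[float, Any]] = {}

    def get_or_compute(self, model_version: str, features: Hashable,
                       predict: Callable[[Hashable], Any]) -> Any:
        key = (model_version, features)
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh hit: skip the model call
        value = predict(features)
        self._store[key] = (now, value)
        return value

    def invalidate_version(self, model_version: str) -> int:
        """Drop every entry for a model version; returns how many were removed."""
        stale = [key for key in self._store if key[0] == model_version]
        for key in stale:
            del self._store[key]
        return len(stale)
```

Including the model version in the key means a newly deployed model never serves predictions computed by its predecessor, even before old entries expire.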

Model Deployment: Take particular care with deployment when the system must handle high load or meet low-latency targets.

RAG Integration: Integrate the Retrieval-Augmented Generation (RAG) pattern so that the model has access to up-to-date data at inference time.
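
The retrieval half of RAG can be illustrated with a deliberately simple sketch: score documents against the query, keep the top matches, and place them in the prompt. Bag-of-words cosine similarity is used here only to keep the example self-contained; real systems typically use embedding models and a vector store. All function names and the prompt wording are assumptions.

```python
import math
import re
from collections import Counter
from typing import List, Sequence


def _bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = (math.sqrt(sum(c * c for c in a.values()))
            * math.sqrt(sum(c * c for c in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: Sequence[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vector = _bag_of_words(query)
    ranked = sorted(documents,
                    key=lambda doc: _cosine(query_vector, _bag_of_words(doc)),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: Sequence[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")
```

Because the documents are fetched at request time, updating the knowledge base changes the model's answers without retraining.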

AI/ML/GenAI System Design: Key Takeaways

- Requirements are King: Meticulously define data requirements, performance metrics, and ethical considerations upfront.

- Embrace New NFRs: Treat interpretability, fairness, robustness, and data governance as first-class citizens.

- Test, Test, and Test Again: Employ specialized testing techniques to validate data quality, model performance, and system behavior.

- Architect for Scalability and Reliability: Carefully design the underlying infrastructure to handle the unique demands of AI/ML/GenAI workloads.

- Secure the Data: Protect data with anonymization, encryption, and access control throughout the system lifecycle.

Building successful AI/ML/GenAI systems requires a commitment to responsible innovation and a willingness to adapt traditional development practices to the unique challenges of this new paradigm. By paying close attention to these extra precautions and considerations, you can increase the likelihood of delivering AI solutions that are not only powerful but also reliable, ethical, and beneficial to society.

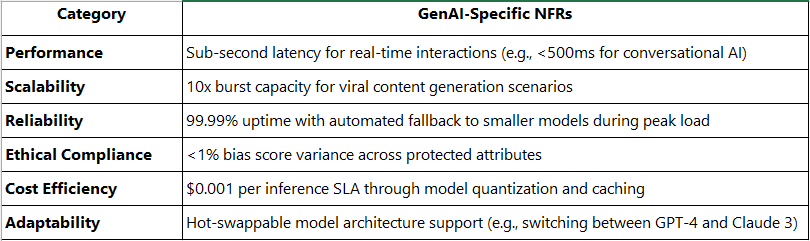

New Non-Functional Requirements for GenAI

Emerging Best Practices

AI-Specific CI/CD:

- Model versioning with MLflow

- Automated red teaming in staging environments

Energy Monitoring:

- Track kWh per inference using tools like CodeCarbon

- Optimize via pruning (e.g., remove 20% least important neurons)
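
The pruning bullet can be illustrated with a small sketch. Note the assumption: the example uses weight magnitude as the measure of importance and zeroes individual weights (unstructured magnitude pruning), which is simpler than removing whole neurons. `magnitude_prune` is a hypothetical helper operating on a flat list of weights.

```python
from typing import List


def magnitude_prune(weights: List[float], fraction: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest magnitude."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")

    prune_count = int(len(weights) * fraction)
    if prune_count == 0:
        return list(weights)

    # Indices ordered from least to most important (by absolute value).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:prune_count])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]
```

After pruning, re-run your accuracy and fairness tests: the energy savings only count if the model still meets its requirements.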

Hybrid Human-AI Loops:

- Implement fallback to human reviewers when confidence <85%

- Real-time feedback ingestion for online learning
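
The confidence-based fallback can be sketched as a small routing function. It assumes a `predict` callable that returns a `(label, confidence)` pair; the `Decision` type, the route names, and the handling of model errors are illustrative choices, with the 0.85 threshold taken from the bullet above.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple


@dataclass
class Decision:
    label: Optional[str]
    confidence: float
    route: str  # "model" or "human_review"


def route_prediction(predict: Callable[[Any], Tuple[str, float]],
                     features: Any,
                     threshold: float = 0.85) -> Decision:
    """Accept confident predictions; send everything else to a human."""
    try:
        label, confidence = predict(features)
    except Exception:
        # A failing model is treated like a zero-confidence prediction.
        return Decision(label=None, confidence=0.0, route="human_review")

    if confidence < threshold:
        return Decision(label=label, confidence=confidence, route="human_review")
    return Decision(label=label, confidence=confidence, route="model")
```

Cases routed to `human_review` are also the most valuable ones to feed back into retraining, since they mark where the model is least certain.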

Final Thoughts

Building robust AI/ML or GenAI systems is more than just training models. It demands a shift in how we define requirements, how we design systems at all levels, and how we plan for non-functional behavior and testing.

To future-proof your architecture:

- Integrate model observability and drift monitoring from Day 1.

- Design for evolution and feedback loops — ML systems are living systems.

- Be mindful of ethical and regulatory obligations — it’s not just about performance.

- Invest in data quality and infrastructure readiness as much as in model tuning.

By embedding these considerations into your system’s DNA, you’ll build AI/ML solutions that are scalable, ethical, robust, and production-ready.

