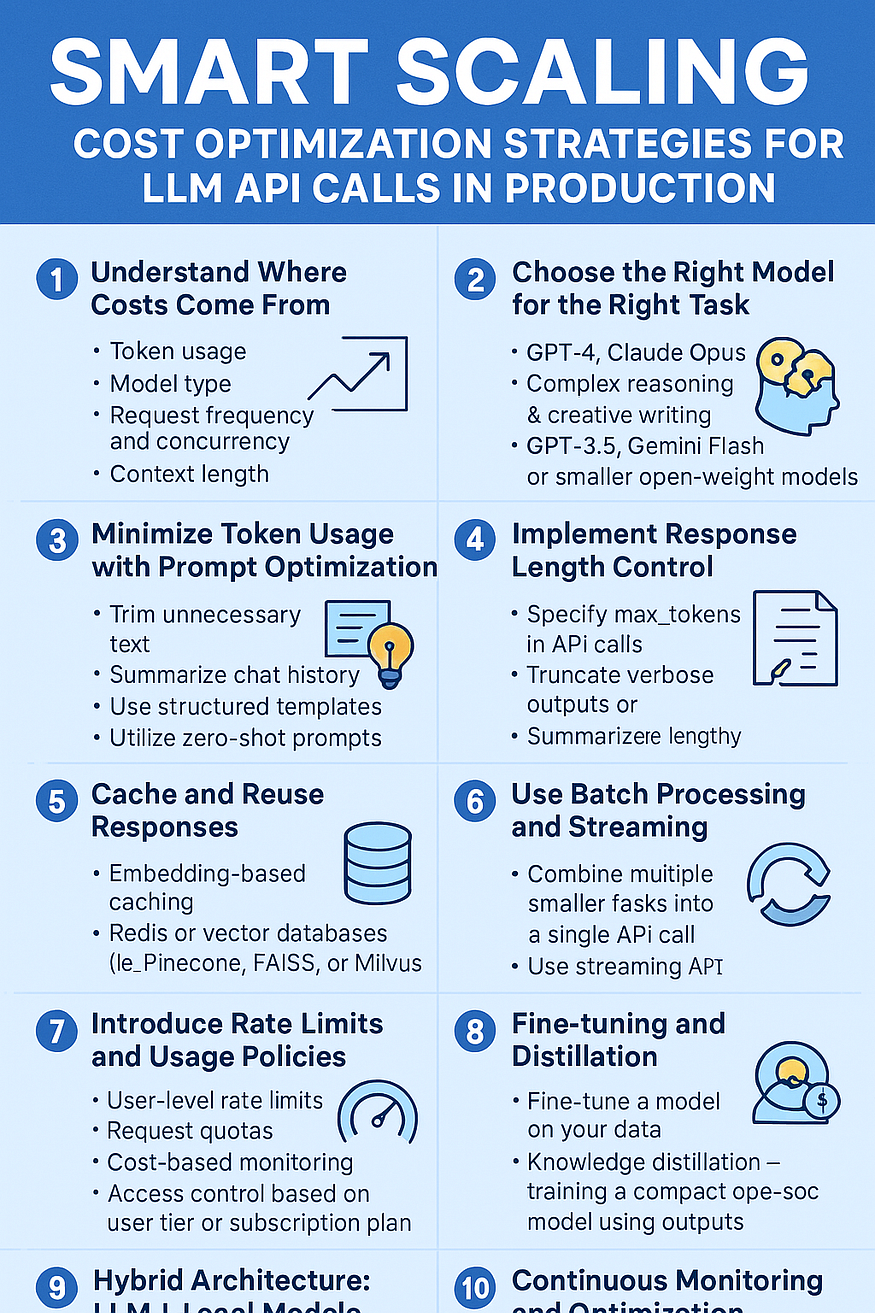

Taming the Beast: Cost Optimization Strategies for LLM API Calls in Production

Large Language Models (LLMs) are revolutionizing how we interact with technology, but their power comes at a price. Deploying and scaling LLM applications in production can lead to significant API costs, quickly burning through budgets if not managed effectively.

This blog explores practical and impactful strategies to optimize the cost of your LLM API calls, ensuring that you can deliver powerful AI-driven experiences without breaking the bank.

Understanding the Cost Drivers: What’s Eating Your Budget?

Before diving into optimization strategies, it’s essential to understand the key factors that influence the cost of LLM API calls:

- Token Usage (Input & Output): Most LLM providers charge based on the number of tokens processed in both the input prompt and the generated output. Longer prompts and more verbose responses directly increase costs.

- Model Choice: Different LLMs have different pricing structures. More powerful models (like GPT-4) typically cost more per token than smaller or less capable models (like GPT-3.5 Turbo).

- Request Frequency: The sheer number of API calls your application makes significantly impacts your overall cost. High-volume applications require careful optimization.

- Latency Requirements: Real-time applications often require lower latency, which can lead to higher costs due to the need for faster (and more expensive) inference endpoints.

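- Region: Pricing can vary between regions, so account for regional differences when choosing where to deploy.

- Inactivity: Some calls may deliver no value at all (for example, calls made by idle or unused features); check for these and eliminate them.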
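To see how these drivers combine, a back-of-the-envelope estimate multiplies average input and output tokens by their per-token prices and by request volume. The sketch below is a minimal illustration. The `ModelPricing` tiers and their prices are hypothetical placeholders, not any provider's actual rates, so substitute your provider's current price sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Hypothetical prices in USD per 1M tokens; replace with your provider's rates."""
    input_per_million: float
    output_per_million: float


def estimate_cost(pricing: ModelPricing, input_tokens: int, output_tokens: int,
                  requests: int = 1) -> float:
    """Estimated USD cost of `requests` calls with the given average token counts."""
    per_request = (input_tokens * pricing.input_per_million
                   + output_tokens * pricing.output_per_million) / 1_000_000
    return per_request * requests


# Hypothetical tiers: a large, capable model and a small, cheap one.
LARGE = ModelPricing(input_per_million=10.0, output_per_million=30.0)
SMALL = ModelPricing(input_per_million=0.5, output_per_million=1.5)

if __name__ == "__main__":
    # 100k requests per month, ~1,500 input and ~300 output tokens each.
    for name, pricing in [("large", LARGE), ("small", SMALL)]:
        print(name, round(estimate_cost(pricing, 1_500, 300, requests=100_000), 2))
```

With these hypothetical numbers, the same workload costs about 2,400 USD on the large tier and 120 USD on the small one, a 20x difference driven purely by model choice.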

In short, these are the main cost levers:

- Token usage: Both input (prompt) and output (response) tokens count toward the total cost.

- Model type: Larger, more capable models (e.g., GPT-4, Gemini 1.5 Pro) cost more than smaller ones (e.g., GPT-3.5, Claude Haiku).

- Request frequency and concurrency: High-volume or parallel calls can multiply costs quickly.

- Context length: Long prompts or chat histories significantly increase token consumption.

Optimizing each of these factors can yield measurable savings.

Cost Optimization Strategies: A Toolkit for Savings

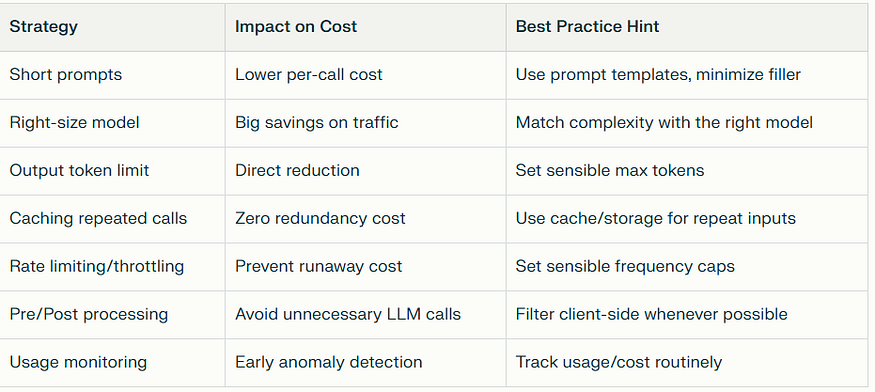

Here are actionable strategies to reduce your LLM API costs:

Prompt Optimization:

- Minimize Prompt Length: Craft concise and targeted prompts that provide the LLM with only the essential information needed to generate a relevant response. Remove unnecessary words, phrases, or examples.

- Use Clear Instructions: Clearly and explicitly instruct the LLM on the desired output format and length. This helps to avoid verbose responses that consume more tokens.

- Optimize Retrieval Augmented Generation (RAG): If using RAG, carefully select the most relevant context passages to include in the prompt. Avoid including irrelevant or redundant information. Review the quality of the data you retrieve so the content is correct, and avoid unnecessary extra requests.

- Prefer Zero-Shot over Few-Shot Prompting Where It Works: Few-shot examples add tokens to every request, so test whether a well-written zero-shot prompt gives acceptable results before paying for examples.

Every token costs money — and optimizing prompts can dramatically reduce API bills.

✅ Strategies:

- Trim unnecessary text: Keep prompts concise and remove redundant context.

- Summarize chat history: Instead of passing full history, send compact summaries or key points.

- Use structured templates: Define standardized prompt frameworks to control token length.

- Few-shot → zero-shot: When possible, switch from few-shot examples to well-engineered zero-shot prompts.

Example:

Instead of

“You are an expert data scientist. Given the following dataset and detailed instructions, please analyze correlations, generate graphs, and provide observations.”

Try

“Analyze correlations, visualize data, and summarize key insights.”
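The chat-history advice above can be sketched as a token-budgeted sliding window: keep the system prompt, optionally add a compact summary of older turns, and include only the newest turns that fit. This is a minimal sketch under stated assumptions: `estimate_tokens` uses a rough 4-characters-per-token heuristic for English text, and the summary is assumed to be produced elsewhere. For exact counts, use your provider's tokenizer (for example, `tiktoken` for OpenAI models).

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough heuristic (an assumption): about 4 characters per token in English."""
    return max(1, math.ceil(len(text) / 4))


def trim_history(messages: list[dict], max_tokens: int, summary: str = "") -> list[dict]:
    """Keep system messages, an optional summary, and the newest turns that fit.

    messages: chat messages as {"role": ..., "content": ...} dicts, oldest first.
    summary: optional compact summary of older turns, produced elsewhere
             (for example by a cheap model); it stands in for dropped history.
    """
    prefix = [m for m in messages if m["role"] == "system"]
    if summary:
        prefix.append({"role": "system",
                       "content": f"Summary of earlier conversation: {summary}"})
    turns = [m for m in messages if m["role"] != "system"]

    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in prefix)
    kept: list[dict] = []
    # Walk backwards from the newest turn and stop once the budget is exhausted.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    kept.reverse()  # restore chronological order
    return prefix + kept
```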

Model Selection:

- Choose the Right Model for the Task: Select the smallest and least expensive LLM that can still deliver the required level of performance for your specific use case. Don’t use a top-tier model if a smaller model can suffice. Test candidates on your own workload: the fewer errors a model makes, the fewer retries and corrections you pay for, so the cheapest model on paper is not always the cheapest in practice.

- Evaluate Open-Source Alternatives: Explore open-source LLMs that can be self-hosted, eliminating API costs altogether. Be mindful of the hardware and maintenance costs associated with self-hosting.

Not every task needs the most powerful (and expensive) LLM.

Adopt a tiered model strategy:

- 🧠 Complex reasoning / creative writing: Use GPT-4 or Claude Opus.

- ⚙️ Routine queries, summaries, classification: Use GPT-3.5, Gemini Flash, or smaller open-weight models.

- 🧩 Internal pre-processing or filtering tasks: Use lightweight open-source models hosted on your infrastructure.

This “model routing” approach ensures you pay for intelligence only where it’s needed.
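A tiered strategy can start as a simple lookup from task type to model tier. In this sketch, the task names, the `Tier` values, and the model identifiers are hypothetical placeholders; in practice, the mapping would come from your own evaluations.

```python
from enum import Enum


class Tier(Enum):
    # Hypothetical model identifiers; replace with the models you have evaluated.
    LOCAL = "local-small-model"   # self-hosted, no per-token API fee
    STANDARD = "standard-model"   # mid-priced API model
    PREMIUM = "premium-model"     # top-tier API model


# Hypothetical mapping from task type to the cheapest tier that handles it well.
ROUTES = {
    "filter": Tier.LOCAL,
    "preprocess": Tier.LOCAL,
    "classification": Tier.STANDARD,
    "summary": Tier.STANDARD,
    "faq": Tier.STANDARD,
    "reasoning": Tier.PREMIUM,
    "creative": Tier.PREMIUM,
}


def route(task_type: str) -> str:
    """Return the model identifier to use for a task type."""
    # Unknown task types fall back to STANDARD rather than PREMIUM.
    return ROUTES.get(task_type, Tier.STANDARD).value
```

Defaulting unknown task types to the standard tier, rather than the premium one, means a newly added feature does not silently run on the most expensive model.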

Caching:

- Implement Caching: Store the responses to frequently asked questions or common queries in a cache. Before making an API call, check whether the response is already cached and, if so, serve it. Make sure cached answers do not go stale when the underlying data changes (for example, by setting an expiry time). For privacy or security reasons, you may also need to keep cache entries unique per user or tenant. The right policy depends on your application.

- Consider Semantic Caching: For more complex queries, use semantic caching, where you store embeddings of previous queries and retrieve cached responses based on semantic similarity.

If your application makes repetitive queries (e.g., FAQs, code snippets, or summaries), caching can save huge costs.

Strategies:

- Implement embedding-based caching — use similarity search (e.g., cosine similarity) to detect near-duplicate queries.

- Use Redis or vector databases (like Pinecone, FAISS, or Milvus) to store prior responses.

- Maintain hash-based request-response logs for fast retrieval.

This can reduce API calls by 30–70% for certain workloads.
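The caching ideas above can be combined into a two-level lookup: an exact match on a hash of the normalized query, then a semantic match on embedding similarity. This is a minimal in-memory sketch, assuming an injected `embed` function (for example, a wrapper around an embedding model); the similarity threshold and TTL are hypothetical defaults to tune. The linear scan stands in for what a vector database such as FAISS or Milvus would do at scale, and the `scope` argument (e.g. a tenant ID) keeps one user's cached answer from being served to another.

```python
import hashlib
import math
import time
from typing import Callable, Optional


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivially different queries share a key."""
    return " ".join(query.lower().split())


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ResponseCache:
    """Exact (hash) lookup first, then semantic (embedding similarity) lookup.

    `embed` is any text-to-vector function; it is injected rather than tied
    to a specific library.
    """

    def __init__(self, embed: Callable[[str], list[float]],
                 threshold: float = 0.9, ttl_seconds: float = 3600.0) -> None:
        self.embed = embed
        self.threshold = threshold
        self.ttl = ttl_seconds
        self.exact: dict[str, tuple[str, float]] = {}  # key -> (response, stored_at)
        self.semantic: list[tuple[str, list[float], str, float]] = []

    def _key(self, query: str, scope: str) -> str:
        # The scope (e.g. a tenant or user ID) keeps private answers from leaking.
        return hashlib.sha256(f"{scope}\x00{normalize(query)}".encode()).hexdigest()

    def get(self, query: str, scope: str = "global") -> Optional[str]:
        now = time.monotonic()
        hit = self.exact.get(self._key(query, scope))
        if hit is not None and now - hit[1] < self.ttl:
            return hit[0]
        query_vec = self.embed(query)
        best, best_score = None, self.threshold
        for entry_scope, vec, response, stored_at in self.semantic:
            if entry_scope != scope or now - stored_at >= self.ttl:
                continue  # other tenant, or expired
            score = cosine(query_vec, vec)
            if score >= best_score:
                best, best_score = response, score
        return best

    def put(self, query: str, response: str, scope: str = "global") -> None:
        now = time.monotonic()
        self.exact[self._key(query, scope)] = (response, now)
        self.semantic.append((scope, self.embed(query), response, now))
```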

Batching and Throttling:

- Batch API Calls: Combine multiple requests into a single API call whenever possible. This reduces the overhead associated with making individual requests.

- Implement Throttling: Limit the number of API calls made per unit of time to prevent runaway costs. Implement queue management to handle spikes in traffic.
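Throttling is commonly implemented as a token bucket: each request spends a token, and tokens refill at a fixed rate up to a burst capacity. The sketch below is a minimal, thread-safe version; the rate and capacity values are assumptions you would set from your provider's limits and your budget.

```python
import threading
import time


class TokenBucket:
    """Allows `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic()
        self.lock = threading.Lock()

    def _refill(self) -> None:
        # Add tokens for the time elapsed since the last refill, up to capacity.
        now = time.monotonic()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now

    def try_acquire(self) -> bool:
        """Take one token if available; never blocks."""
        with self.lock:
            self._refill()
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False

    def acquire(self) -> None:
        """Block until a token is available."""
        while True:
            with self.lock:
                self._refill()
                if self.tokens >= 1:
                    self.tokens -= 1
                    return
                wait = (1 - self.tokens) / self.rate
            time.sleep(wait)
```

Call `acquire()` before each API request to smooth traffic, or use `try_acquire()` and push rejected requests onto a queue to absorb spikes.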

💡 Batch requests:

Combine multiple smaller tasks into a single API call when possible. This reduces per-request overhead, and sending shared instructions once instead of with every request lowers the total token count.
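One way to batch is to send the shared instruction once, number the items, and ask for a JSON array back. This sketch covers only the prompt-packing and parsing side; the prompt wording is an assumption. The count check exists because a model can drop or merge items, in which case you would fall back to smaller batches or individual calls.

```python
import json


def build_batch_prompt(instruction: str, items: list[str]) -> str:
    """Send the shared instruction once, followed by numbered items."""
    lines = [
        instruction,
        "Return a JSON array with exactly one result per item, in the same order.",
        "",
    ]
    lines += [f"{i}. {item}" for i, item in enumerate(items, start=1)]
    return "\n".join(lines)


def parse_batch_response(raw: str, expected: int) -> list:
    """Parse the model's JSON array and verify that no item was dropped."""
    results = json.loads(raw)
    if not isinstance(results, list) or len(results) != expected:
        raise ValueError(f"expected {expected} results, got {results!r}")
    return results
```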

⚡ Streaming responses:

Use streaming APIs to process responses incrementally, reducing latency and allowing early termination when enough data is received.
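Early termination can be sketched as a loop that reads streamed text chunks and stops once a stop marker appears or a character budget is reached. Here `chunks` stands in for the text deltas of your SDK's streaming response; that mapping is an assumption. Whether closing the stream early also stops output-token billing depends on the provider, so verify it before counting on savings; the latency benefit applies either way.

```python
from typing import Iterable, Optional


def consume_stream(chunks: Iterable[str], max_chars: int,
                   stop_marker: Optional[str] = None) -> str:
    """Accumulate streamed text, returning early once enough has arrived."""
    text = ""
    try:
        for chunk in chunks:
            text += chunk
            if stop_marker and stop_marker in text:
                return text.split(stop_marker, 1)[0]  # drop the marker and anything after
            if len(text) >= max_chars:
                return text[:max_chars]
        return text
    finally:
        # Stop reading from the source if it supports closing (generators do).
        close = getattr(chunks, "close", None)
        if callable(close):
            close()
```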

Asynchronous Processing:

- Use Asynchronous API Calls: Offload non-critical API calls to asynchronous processing queues. This allows you to defer processing until off-peak hours or when resources are more available.
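Deferred, non-critical work can be drained from a queue by a small pool of async workers, which also caps how many calls run at once. In this sketch, `call_llm` is a hypothetical async wrapper around your provider's SDK; scheduling the run for off-peak hours (for example, with a cron job) is outside its scope.

```python
import asyncio
from typing import Awaitable, Callable


async def process_deferred(prompts: list[str],
                           call_llm: Callable[[str], Awaitable[str]],
                           max_concurrency: int = 4) -> list[str]:
    """Drain a queue of non-critical prompts with at most max_concurrency calls in flight."""
    queue: asyncio.Queue = asyncio.Queue()
    for index, prompt in enumerate(prompts):
        queue.put_nowait((index, prompt))
    results = [""] * len(prompts)

    async def worker() -> None:
        while True:
            try:
                index, prompt = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # queue drained: this worker is done
            results[index] = await call_llm(prompt)

    await asyncio.gather(*(worker() for _ in range(max_concurrency)))
    return results
```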

Response Validation and Truncation:

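- Validate Responses: After receiving a response from the LLM, validate that it meets your requirements (e.g., format, length, accuracy). If the response is invalid, attempt to correct it or generate a new response. Avoid enabling automatic retries blindly, though: each retry is another billed call, so analyze failure patterns before deciding when, and how often, to retry.

- Truncate Long Responses: If the LLM generates a response that is longer than necessary, truncate it before displaying it, storing it, or passing it back as context in later turns, which keeps downstream prompts short. Output tokens that were already generated are still billed, so the real savings come from capping output length up front.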
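Validation with a deliberately small retry cap might look like this. It is a sketch that assumes the model was asked for a JSON object with specific keys; `call_llm` is a hypothetical synchronous wrapper around your SDK. Returning `None` instead of retrying indefinitely keeps failures available for logging and analysis.

```python
import json
from typing import Callable, Optional


def get_valid_json(call_llm: Callable[[str], str], prompt: str,
                   required_keys: set[str], max_attempts: int = 2) -> Optional[dict]:
    """Call the model and return its reply as a dict if it passes validation.

    Each attempt is a billed call, so max_attempts is kept small; when every
    attempt fails, None is returned so the failure can be logged and analyzed.
    """
    for _ in range(max_attempts):
        raw = call_llm(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON counts as a failed attempt
        if isinstance(data, dict) and required_keys.issubset(data):
            return data
    return None
```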

Specify max_tokens in your API calls to prevent runaway responses.

For most applications, a concise answer is enough — avoid letting the model generate unnecessary paragraphs.

Additionally, use post-processing logic to:

- Truncate verbose outputs, or

- Summarize lengthy LLM responses before displaying to users.
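Since `max_tokens` caps what you are billed for, post-processing truncation is mainly about what you display or carry forward as context. A minimal sketch that cuts at the last complete sentence within a character limit follows; the sentence-boundary regex is a simple assumption and will mis-split after abbreviations such as "Dr.".

```python
import re

# A sentence end: ".", "!" or "?" followed by whitespace or the end of the string.
SENTENCE_END = re.compile(r"[.!?](?=\s|$)")


def truncate_at_sentence(text: str, max_chars: int) -> str:
    """Shorten text to at most max_chars (max_chars >= 1), preferring a sentence cut."""
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    ends = [m.end() for m in SENTENCE_END.finditer(cut)]
    if ends:
        return cut[:ends[-1]]
    # No sentence boundary within the limit: hard cut, leaving room for an ellipsis.
    return text[:max_chars - 1].rstrip() + "…"
```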

Fine-Tuning for Specific Tasks:

- Fine-Tune When Appropriate: If you are performing a specific task repeatedly, consider fine-tuning a smaller LLM on a dataset relevant to that task. This can significantly improve performance and reduce costs compared to using a larger, general-purpose model.

If your use case involves repeated patterns (like customer queries or domain-specific writing), consider:

- Fine-tuning a smaller model on your data, or

- Knowledge distillation — training a compact open-source model using outputs from a larger one.

Once trained, host it locally or on cloud GPU instances. While initial setup has a cost, long-term inference becomes far cheaper than repeated API calls.
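Whether fine-tuning or distillation pays off is a break-even calculation: divide the one-time setup cost by the per-request saving. All figures in this sketch are hypothetical, and the self-hosted per-request cost should include amortized GPU, hosting, and maintenance.

```python
import math


def break_even_requests(setup_cost: float, api_cost_per_request: float,
                        self_hosted_cost_per_request: float) -> float:
    """Requests needed before a fine-tuned/self-hosted model becomes cheaper.

    setup_cost: one-time cost (data preparation, training, deployment).
    Returns math.inf if self-hosting is not cheaper per request.
    """
    saving = api_cost_per_request - self_hosted_cost_per_request
    if saving <= 0:
        return math.inf  # never pays off
    return setup_cost / saving
```

For example, a hypothetical 10,000 USD setup that cuts the per-request cost from 0.02 to 0.005 USD breaks even after about 666,667 requests, or roughly 6.7 months at 100,000 requests per month.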

Introduce Rate Limits and Usage Policies:

In multi-user or production systems, user-level rate limits prevent runaway costs from heavy users or automated misuse.

Combine this with:

- Request quotas (per day/week/month)

- Cost-based monitoring (stop or warn when nearing thresholds)

- Access control based on user tier or subscription plan

This ensures sustainability as usage scales.
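Per-user quotas and cost thresholds can be combined in a small guard that returns allow, warn, or block before each call. The plan names, limits, and the 80% warning ratio are hypothetical. The counters live in memory here, whereas a production system would keep them in a shared store such as Redis and reset them on a schedule.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class QuotaPolicy:
    daily_requests: int
    monthly_budget_usd: float
    warn_ratio: float = 0.8  # warn once 80% of the monthly budget is spent


# Hypothetical subscription plans.
PLANS = {
    "free": QuotaPolicy(daily_requests=50, monthly_budget_usd=1.0),
    "pro": QuotaPolicy(daily_requests=1_000, monthly_budget_usd=50.0),
}


class UsageGuard:
    """Per-user request quota and spend threshold, checked before each LLM call."""

    def __init__(self, plans: dict[str, QuotaPolicy]) -> None:
        self.plans = plans
        self.requests_today: dict[str, int] = defaultdict(int)
        self.spend_this_month: dict[str, float] = defaultdict(float)

    def check(self, user_id: str, plan: str) -> str:
        """Return "allow", "warn", or "block" for the user's next request."""
        policy = self.plans[plan]
        spent = self.spend_this_month[user_id]
        if self.requests_today[user_id] >= policy.daily_requests:
            return "block"
        if spent >= policy.monthly_budget_usd:
            return "block"
        if spent >= policy.warn_ratio * policy.monthly_budget_usd:
            return "warn"
        return "allow"

    def record(self, user_id: str, cost_usd: float) -> None:
        """Call after each completed request with its actual cost."""
        self.requests_today[user_id] += 1
        self.spend_this_month[user_id] += cost_usd

    def reset_daily(self) -> None:
        self.requests_today.clear()
```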

Pre/Post-processing:

- Pre-filter inputs and post-filter results programmatically to handle simple validations or error checks, only escalating to LLMs when strictly necessary.

- Use regular expressions or standard parsing for straightforward text manipulation rather than calling an LLM.
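A pre-filter can answer predictable inputs deterministically and send only the remainder to the model. The patterns, the canned reply, and the `lookup_order` and `call_llm` callables below are hypothetical examples, not a recommended rule set.

```python
import re
from typing import Callable

# Hypothetical patterns for inputs that do not need a model.
GREETING = re.compile(r"^\s*(hi|hello|hey)[\s!.]*$", re.IGNORECASE)
ORDER_ID = re.compile(r"\border\s*#?\s*(\d{6,})\b", re.IGNORECASE)


def answer(message: str,
           lookup_order: Callable[[str], str],
           call_llm: Callable[[str], str]) -> str:
    """Resolve simple, predictable inputs in code; escalate everything else."""
    if not message.strip():
        return "Please type a question."            # empty input: no call needed
    if GREETING.match(message):
        return "Hello! What can I help you with?"   # canned reply
    match = ORDER_ID.search(message)
    if match:
        return lookup_order(match.group(1))         # deterministic lookup, no LLM
    return call_llm(message)                        # only the remainder costs tokens
```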

Call Frequency Control:

- Use request throttling and rate-limiting to prevent accidental overuse and manage bursts in traffic.

- Implement user quotas or session limits for end users if integrating LLMs into products.

Hybrid Architecture: LLM + Local Models:

A powerful cost-saving architecture involves:

- Edge or local models for preprocessing, classification, and intent recognition.

- LLM APIs only for high-level reasoning, creativity, or knowledge retrieval.

For example, in a chatbot:

- Local model detects intent and context.

- Only complex, ambiguous, or novel questions go to GPT-4.

This hybrid setup can reduce API calls by 40–60% without degrading user experience.
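The chatbot flow above can be sketched as confidence-based escalation: a local classifier returns an intent and a confidence, and only low-confidence or unhandled intents reach the LLM API. The classifier, the canned intents, and the 0.85 threshold are assumptions; the threshold trades cost against answer quality and is best tuned on labeled traffic.

```python
from typing import Callable

# Hypothetical intents a small local model can recognize and answer without an LLM.
CANNED = {
    "opening_hours": "We are open 9:00 to 17:00, Monday to Friday.",
    "reset_password": "Use the 'Forgot password' link on the sign-in page.",
}


def handle(message: str,
           classify: Callable[[str], tuple[str, float]],
           call_llm: Callable[[str], str],
           min_confidence: float = 0.85) -> tuple[str, str]:
    """Return (reply, source), where source is "local" or "llm"."""
    intent, confidence = classify(message)  # cheap local model
    if confidence >= min_confidence and intent in CANNED:
        return CANNED[intent], "local"
    # Ambiguous, novel, or complex requests escalate to the paid API.
    return call_llm(message), "llm"
```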

Monitoring and Analytics:

- Track Token Usage: Monitor token usage, request frequency, and other cost-related metrics to identify areas for optimization.

- Analyze Response Quality: Evaluate the quality of generated responses to identify potential issues with accuracy, bias, or toxicity.

- Set Budgets and Alerts: Define clear budgets for LLM API costs and set up alerts to notify you when you are approaching your budget limits.

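- Review Inactivity Metrics: Identify features or integrations that keep making calls but deliver little or no value. If the return on investment is zero, it is better to cut them than to keep burning resources.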

Monitoring and Alerts:

- Track API usage, costs, and error rates using dashboards and set threshold alerts for anomalies or spikes.

- Regularly review cost breakdowns from your LLM provider for unused subscriptions or accidental high-consumption patterns.

Finally, measure everything.

Track:

- Token usage per feature

- Cost per user/session

- Model latency vs. performance

Use tools like:

- OpenAI Usage Dashboard, Langfuse, Helicone, or PromptLayer

- Custom monitoring with LangChain callbacks or SDK telemetry

These insights help continuously fine-tune prompts, choose models, and manage scaling intelligently.
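If you are building custom monitoring, the metrics listed above reduce to a few aggregations over per-call records. This is a minimal in-memory sketch; the `CallRecord` fields are assumptions about what your API wrapper logs, and hosted tools such as Langfuse or Helicone collect similar data without custom code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    feature: str
    user_id: str
    model: str
    input_tokens: int
    output_tokens: int
    cost_usd: float
    latency_ms: float


class UsageTracker:
    """Collects one record per LLM call and aggregates cost-related metrics."""

    def __init__(self) -> None:
        self.records: list[CallRecord] = []

    def log(self, record: CallRecord) -> None:
        self.records.append(record)

    def tokens_by_feature(self) -> dict[str, int]:
        totals: dict[str, int] = defaultdict(int)
        for r in self.records:
            totals[r.feature] += r.input_tokens + r.output_tokens
        return dict(totals)

    def cost_by_user(self) -> dict[str, float]:
        totals: dict[str, float] = defaultdict(float)
        for r in self.records:
            totals[r.user_id] += r.cost_usd
        return dict(totals)

    def avg_latency_by_model(self) -> dict[str, float]:
        sums: dict[str, float] = defaultdict(float)
        counts: dict[str, int] = defaultdict(int)
        for r in self.records:
            sums[r.model] += r.latency_ms
            counts[r.model] += 1
        return {model: sums[model] / counts[model] for model in sums}
```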

API Provider Check:

Avoid always relying on a single provider that is good but expensive. Test each function against several providers and models, and use the one that offers the best performance for the price; this can reduce costs and increase the benefits for the company.

Test More Often:

If possible, create a test framework where you can check code, run tests, and collect the results for review.
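A provider comparison harness can be as simple as running each candidate over a fixed set of test cases and picking the cheapest one that meets an accuracy bar. In this sketch, the candidates, their per-call costs, and the exact-match scoring are assumptions; many tasks need fuzzy matching or human review instead.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str                   # hypothetical model or provider label
    call: Callable[[str], str]  # wraps that provider's SDK
    cost_per_call_usd: float    # estimated average cost per call


def evaluate(candidates: list[Candidate], cases: list[tuple[str, str]],
             min_accuracy: float = 0.9) -> tuple[Optional[str], list[tuple[str, float, float]]]:
    """Run every candidate on (prompt, expected) cases.

    Returns the name of the cheapest candidate meeting min_accuracy (or None),
    plus (name, accuracy, total_cost_usd) rows for review.
    """
    report = []
    for cand in candidates:
        correct = sum(
            1 for prompt, expected in cases
            if cand.call(prompt).strip().lower() == expected.strip().lower()
        )
        report.append((cand.name, correct / len(cases),
                       cand.cost_per_call_usd * len(cases)))
    passing = [row for row in report if row[1] >= min_accuracy]
    best = min(passing, key=lambda row: row[2])[0] if passing else None
    return best, report
```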

Tools and Resources: Automating Cost Optimization

- LangChain: Provides tools for caching, prompt management, and other cost optimization techniques.

- MLflow: Can be used to track token usage and other metrics associated with LLM deployments.

- PromptLayer: A comprehensive platform for prompt tracking and evaluation.

Conclusion: A Sustainable Approach to GenAI

Optimizing the cost of LLM API calls is not a one-time fix but an ongoing process. By implementing the strategies outlined in this blog, carefully monitoring your usage, and continuously adapting your approach, you can ensure that your GenAI applications deliver maximum value while staying within budget. This helps your team and organization build trust in your AI systems while sustaining high performance and strong results.

The future belongs to cost-optimized AI.

Hashtags:

#LLM #AIinProduction #AICostOptimization #GenAI #PromptEngineering #APICosts #AIInfra #ModelRouting #AIEngineering #LangChain #MLOps

- Visit my blogs

- Follow me on Medium and subscribe for free to catch my latest posts.

- Let’s connect on LinkedIn / Ajay Verma
