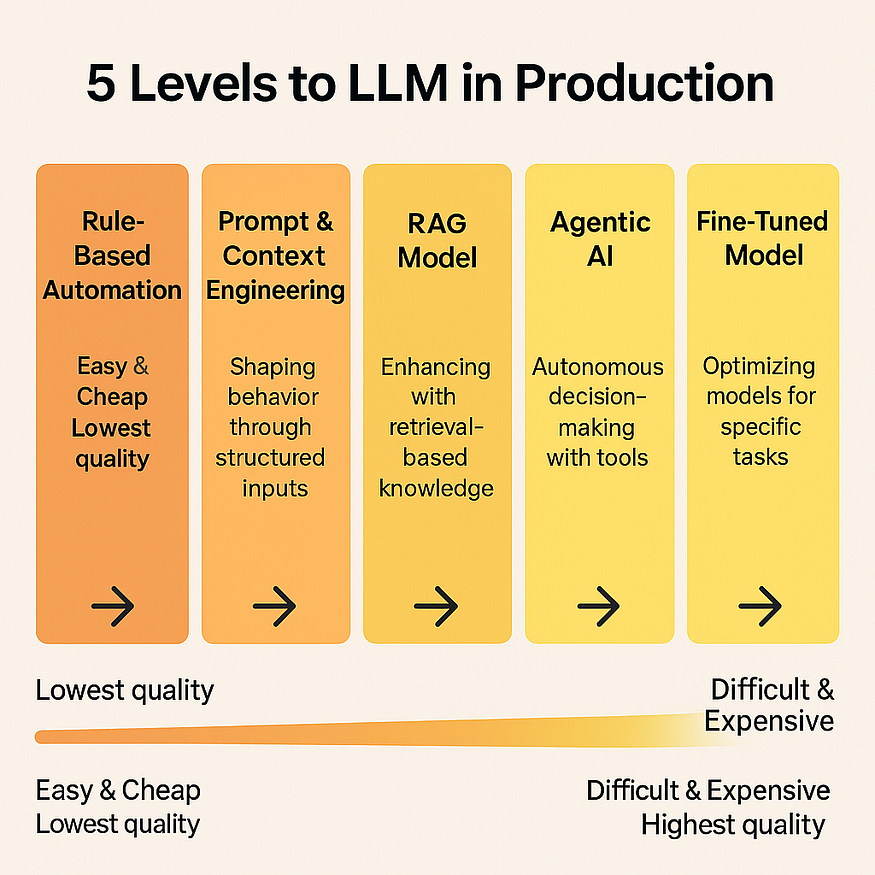

Beyond the Chatbot: The 5+1 Levels of LLM Maturity in Production

The “AI Hype” phase is over. We are now in the “AI Engineering” phase. For CTOs and AI Architects, the question is no longer whether to use Generative AI, but how to architect it for reliability, cost-efficiency, and domain accuracy.

Implementing LLMs in production isn’t a binary choice between “using ChatGPT” and “building your own model.” It is a spectrum of complexity. Below, we break down a Level 0 to Level 5 maturity model for GenAI applications, analyzing the trade-offs between cost, quality, and engineering effort.

Level 0: Rule-Based Automation – The Foundation Layer

The Baseline

Before we touch neural networks, we must acknowledge the foundation. Level 0 relies on deterministic logic — if/else statements, Regular Expressions (Regex), and rigid decision trees.

How it works: Input text is matched against pre-defined patterns. If a user says “Reset password,” the bot triggers the specific reset script.
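Here is a minimal Python sketch of this pattern. The rule table, intent names, and regexes are hypothetical. The sketch also shows the brittleness discussed below: a paraphrase that no rule anticipates simply falls through.

```python
import re
from typing import Optional

# Illustrative rule table: each compiled pattern maps to a deterministic intent.
RULES = [
    (re.compile(r"\breset\s+(my\s+)?password\b", re.IGNORECASE), "reset_password"),
    (re.compile(r"\bcancel\s+(my\s+)?subscription\b", re.IGNORECASE), "cancel_subscription"),
]

def match_intent(text: str) -> Optional[str]:
    """Return the first intent whose pattern matches, or None if no rule fires."""
    for pattern, intent in RULES:
        if pattern.search(text):
            return intent
    return None

print(match_intent("Please reset my password"))  # reset_password
print(match_intent("I'm locked out"))            # None: no rule covers this phrasing
```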

When to Use:

- Structured data processing with known patterns

- Compliance-driven workflows requiring audit trails

- Low-budget prototypes and MVPs

- Scenarios where explainability is paramount

Challenges: Extremely brittle. It fails as soon as the user deviates from the expected phrasing (e.g., “I’m locked out” might not trigger the “Reset password” rule).

- Brittle systems that break with edge cases

- Requires extensive manual rule creation

- Cannot handle natural language nuances

- High maintenance burden as requirements evolve

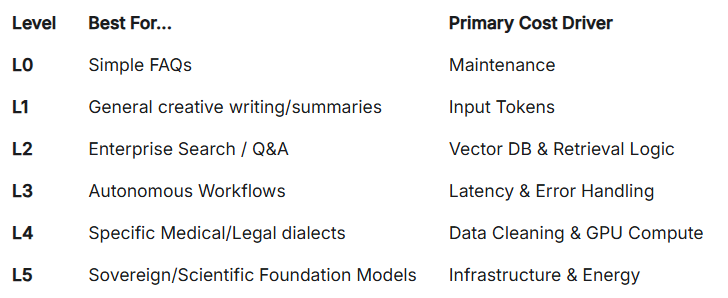

Cost vs. Quality: Minimal infrastructure costs ($100–500/month) but lowest flexibility. Quality peaks at 60–70% for narrow use cases but degrades rapidly outside defined parameters.

- Cost: $ (Extremely Low)

- Quality: Low (Zero adaptability)

Use Level 0 wherever the logic is stable and auditable (KYC checks, routing, policy enforcement), then augment with LLMs where variance is high.
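One way to express "rules where logic is stable, LLMs where variance is high" is a router. It falls back to a model only when no deterministic rule fires. In this sketch, `fake_llm` is a hypothetical stand-in; in practice that callable would wrap your provider's client.

```python
import re
from typing import Callable, Tuple

# Deterministic rules for stable, auditable cases (illustrative patterns).
RULES = {
    "reset_password": re.compile(r"\breset\s+(my\s+)?password\b", re.IGNORECASE),
    "kyc_status": re.compile(r"\bkyc\b", re.IGNORECASE),
}

def route(text: str, llm_fallback: Callable[[str], str]) -> Tuple[str, str]:
    """Try every rule first and only send unmatched, high-variance input to the LLM.

    Returns (source, intent) so each decision can be traced in audit logs.
    """
    for intent, pattern in RULES.items():
        if pattern.search(text):
            return ("rule", intent)
    return ("llm", llm_fallback(text))

def fake_llm(text: str) -> str:
    """Hypothetical stand-in for a model call that classifies free-form text."""
    return "reset_password" if "locked out" in text.lower() else "unknown"

print(route("Reset password please", fake_llm))  # ('rule', 'reset_password')
print(route("I'm locked out", fake_llm))          # ('llm', 'reset_password')
```

Returning the decision source alongside the intent keeps the deterministic path auditable, even when a model handles the remainder.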

Level 1: Prompt & Context Engineering – Unlocking LLM Potential

The “Programmer as Whisperer”

This is the entry point for most GenAI applications and the most accessible path to production AI. We use powerful off-the-shelf models (GPT-4o, Claude 3.5, Gemini) and shape their behavior strictly through input text, harnessing pre-trained LLMs through strategic prompt design without modifying the underlying model.

Core idea:

- You rely on the model’s pre‑training plus whatever you can fit in the prompt (system message + user message + examples + small snippets of context).

- No external retrieval pipeline, no fine‑tuning; just smart prompt patterns and structured calling.

Key Techniques:

- Zero-Shot Prompting: Direct instructions without examples

- Few-Shot Prompting: Providing 3–5 examples of input/output pairs within the prompt to guide the model’s style and logic.

- Chain of Thought (CoT): Prompting the model to “think out loud” step by step before answering, which often improves accuracy on multi-step reasoning.

- System Prompting: Defining the persona (“You are a Senior Legal Analyst…”) and constraints at the system level.

- Role-Based Prompting: Assigning expert personas (“You are a senior financial analyst…”)

- System-User-Assistant Patterns: Structured conversation design

- Constitutional AI Principles: Embedding ethical guidelines and constraints
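The sketch below combines system prompting, role-based prompting, and few-shot prompting into a single request payload. It assumes the role/content message convention that most chat APIs share. The function name and examples are hypothetical, and no model is called.

```python
from typing import Dict, List, Tuple

def build_few_shot_messages(
    system_prompt: str,
    examples: List[Tuple[str, str]],
    user_input: str,
) -> List[Dict[str, str]]:
    """Assemble a system message, few-shot input/output pairs, and the real query."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        # Each example becomes a user turn followed by the ideal assistant turn.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": user_input})
    return messages

messages = build_few_shot_messages(
    system_prompt="You are a senior financial analyst. Answer in one sentence.",
    examples=[
        ("Revenue up 10%, costs up 20%.", "Margins are shrinking."),
        ("Revenue flat, costs down 15%.", "Margins are improving."),
        ("Revenue up 5%, costs up 5%.", "Margins are roughly stable."),
    ],
    user_input="Revenue down 3%, costs down 10%.",
)
print(len(messages))  # 8: 1 system + 3 example pairs x 2 + 1 user
```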

Context Engineering Strategies:

- Document injection for domain specificity

- Template-based generation with variable slots

- Output format specification (JSON, XML, markdown)

- Constraint definition (tone, length, technical level)
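Here is a minimal sketch of template-based generation with an explicit JSON output contract, using only the Python standard library. The template slots, the `summary`/`risk_level` schema, and the sample reply are hypothetical. The idea is that the format you request in the prompt is also the format you validate.

```python
import json
from string import Template

# Template with variable slots ($-placeholders) plus tone, length, and format constraints.
PROMPT = Template(
    "Summarize the following $doc_type for a $audience audience.\n"
    "Tone: $tone. Max length: $max_words words.\n"
    'Respond ONLY with JSON of the form {"summary": string, "risk_level": "low"|"medium"|"high"}.\n\n'
    "Document:\n$document"
)
ALLOWED_RISK = {"low", "medium", "high"}

def render_prompt(**slots: str) -> str:
    """Fill every slot; Template.substitute raises KeyError if one is missing."""
    return PROMPT.substitute(**slots)

def parse_output(raw: str) -> dict:
    """Validate the model's reply against the requested JSON contract."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("summary"), str):
        raise ValueError("missing or non-string 'summary'")
    if data.get("risk_level") not in ALLOWED_RISK:
        raise ValueError(f"unexpected risk_level {data.get('risk_level')!r}")
    return data

prompt = render_prompt(doc_type="contract", audience="non-technical", tone="neutral",
                       max_words="80", document="(contract text here)")
reply = '{"summary": "Two-year supply deal with auto-renewal.", "risk_level": "medium"}'
print(parse_output(reply)["risk_level"])  # medium
```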

Challenges: Limited by the Context Window. You cannot stuff an entire library of manuals into a prompt. Latency increases as prompts get longer.

- Prompt brittleness across model versions

- Context window limitations (from roughly 4K to over 1M tokens, depending on the model)

- Inconsistent outputs requiring retry logic

- Difficult to version control and test systematically

- Prompt injection vulnerabilities
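A common pattern for handling inconsistent outputs is to validate each reply and retry, feeding the error back into the prompt. This sketch assumes `call_model` is any function that takes a prompt and returns text. The scripted stub stands in for a real API.

```python
import json
from typing import Any, Callable

def call_with_retries(
    call_model: Callable[[str], str],
    prompt: str,
    max_attempts: int = 3,
) -> Any:
    """Call a model until it returns valid JSON, appending the parse error on failure."""
    last_error = None
    for _ in range(max_attempts):
        raw = call_model(prompt)
        try:
            return json.loads(raw)
        except json.JSONDecodeError as err:
            last_error = err
            # Tell the model what went wrong so the next attempt can self-correct.
            prompt = f"{prompt}\n\nYour previous reply was not valid JSON ({err.msg}). Reply with JSON only."
    raise RuntimeError(f"no valid JSON after {max_attempts} attempts") from last_error

# Scripted stub: the first reply is prose, the second is valid JSON.
replies = iter(["Sure! Here is the answer: yes", '{"answer": "yes"}'])
result = call_with_retries(lambda p: next(replies), "Is the sky blue? Reply as JSON.")
print(result)  # {'answer': 'yes'}
```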

Cost vs. Quality: API costs of $500–3,000/month for moderate usage. Quality reaches 70–82% for well-engineered prompts. Ideal for content generation, customer support, and basic analysis tasks.

- Cost: $$ (Pay per token)

- Quality: Medium (High general intelligence, but prone to hallucinations on specific domain data).

Level 2: RAG (Retrieval-Augmented Generation) – Bridging Knowledge Gaps

Grounding the Model

RAG reduces hallucinations by connecting the LLM to your private data. The model doesn’t “memorize” your data; it “reads” it on the fly.

Core idea:

- Ingest data → chunk + embed → index in a vector / hybrid store → retrieve top‑K relevant chunks per query → feed chunks + query into the LLM for generation.

- This makes knowledge updatable without retraining, and is powerful for documentation, FAQs, policies, and domain content.
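The ingest → embed → retrieve top-K → generate flow fits in a few lines. To stay self-contained, this version uses a toy bag-of-words vector and cosine similarity. A real system would use an embedding model and a vector store instead. The documents and helper names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Score every chunk against the query and keep the top-k."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    return sorted(scored, reverse=True)[:k]

def build_prompt(query: str, hits: List[Tuple[float, str]]) -> str:
    """Concatenate numbered sources with the question so answers can cite them."""
    context = "\n".join(f"[{i + 1}] {chunk}" for i, (_, chunk) in enumerate(hits))
    return (f"Answer using only the sources below and cite them as [n].\n\n"
            f"Sources:\n{context}\n\nQuestion: {query}")

chunks = [
    "Employees accrue 20 vacation days per year.",
    "The VPN must be used on public Wi-Fi.",
    "Unused vacation days expire at the end of March.",
]
hits = retrieve("How many vacation days do employees get?", chunks)
print(build_prompt("How many vacation days do employees get?", hits))
```

Numbering the sources in the prompt is what makes the answer auditable: each claim can be traced back to a retrieved chunk.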

The Evolution of RAG:

1. Basic RAG (Vector Search): Chunks text, stores it in a vector database (Pinecone/Milvus), and retrieves based on semantic similarity.

- Embeds documents into vector databases (Pinecone, Weaviate, Chroma)

- Retrieves top-k semantically similar chunks

- Concatenates retrieved context with user query

2. GraphRAG: Uses knowledge graphs (e.g., Neo4j) to capture relationships between entities. If you ask about “Project X,” GraphRAG knows “Project X” is owned by “Alice,” who works in “Finance,” providing deeper context than similarity search alone.

- Builds knowledge graphs from documents

- Enables relationship-based reasoning and multi-hop queries

- Excels at complex questions requiring entity connections

- Example: “How are Company A’s suppliers affected by Company B’s patent?”

3. Agentic RAG: Instead of a single retrieval, the system acts as a researcher. It may query the database, realize the info is insufficient, generate a new query, and search again (Iterative Retrieval).

- LLM decides when and what to retrieve

- Multi-step retrieval with query refinement

- Self-correcting mechanisms based on answer quality

- Integrates multiple data sources dynamically

4. Hybrid RAG

- Combines dense (semantic) and sparse (keyword) retrieval

- Reranking models improve precision

- Metadata filtering for targeted search
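A common way to combine dense and sparse results in Hybrid RAG is Reciprocal Rank Fusion (RRF). It merges ranked lists without calibrating their raw scores against each other. This sketch assumes you already have the two ranked lists of document IDs, which are hypothetical here. A reranking model and metadata filters would typically run before or after this step.

```python
from collections import defaultdict
from typing import Dict, List

def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of doc IDs with Reciprocal Rank Fusion (RRF).

    Each list contributes 1 / (k + rank) per document (rank starts at 1);
    k=60 is the constant commonly used for RRF.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

dense = ["doc_policy", "doc_faq", "doc_handbook"]    # semantic (vector) results
sparse = ["doc_faq", "doc_sku_1234", "doc_policy"]  # keyword (BM25-style) results
print(reciprocal_rank_fusion([dense, sparse]))
# ['doc_faq', 'doc_policy', 'doc_sku_1234', 'doc_handbook']
```

`doc_faq` ranks first because both retrievers place it near the top. `doc_sku_1234`, an exact keyword hit that the dense retriever missed, still enters the fused list.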

Implementation Architecture:

User Query → Query Embedding → Vector Search → Reranking → Context Assembly → LLM Generation → Response

Challenges: “Garbage in, Garbage out.” If your retrieval system fetches the wrong document, the LLM gives the wrong answer.

- Chunking strategy impacts quality (size, overlap, semantic boundaries)

- Embedding model selection and maintenance

- Retrieval latency (50–300ms added to response time)

- Context stuffing that dilutes the LLM’s focus

- Handling outdated or contradictory sources

- Infrastructure complexity (vector DBs, embedding pipelines)
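Chunk size and overlap are usually the first knobs teams tune. Below is a minimal sliding-window chunker that counts words as a stand-in for tokens. That is an assumption: production pipelines usually count with the embedding model's tokenizer and try to respect sentence or section boundaries.

```python
from typing import List

def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks

text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one chunk. The cost is a larger index.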

Cost vs. Quality: Infrastructure costs $1,000–8,000/month (vector DB, embeddings, LLM calls). Quality reaches 82–90% with proper tuning. Essential for customer support, technical documentation, and knowledge-intensive applications.

- Cost: $$$ (Vector storage + Inference costs)

- Quality: High (Factual, grounded, and auditable).

RAG is often the first “serious” production level for enterprises because it keeps the base model generic while making answers domain-aware and auditable.

Level 3: Agentic AI – Autonomous Decision-Making Systems

From “Chatting” to “Doing”

At this level, the LLM stops being just a text generator and becomes a reasoning engine that plans, executes, and iterates across multi-step workflows. This is the shift from Knowledge to Action.

How it works: The Agent is given a goal (“Analyze this CSV and email the summary to the CEO”). It breaks the goal into sub-tasks, selects the right tools (Python REPL, Search API, Gmail API), executes them, and critiques its own results.
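The goal → tools → execute → observe cycle can be sketched as a small loop. Here the LLM is replaced by a scripted function that returns action dicts. Both tools are hypothetical stand-ins for a Python REPL and an email API; only the control flow matters.

```python
from typing import Callable, Dict, List

def summarize_csv(csv_text: str) -> str:
    """Hypothetical analysis tool: count rows and total the second column."""
    rows = [line.split(",") for line in csv_text.strip().splitlines()[1:]]
    total = sum(float(r[1]) for r in rows)
    return f"{len(rows)} rows, total revenue {total:.0f}"

def send_email(to: str, body: str) -> str:
    """Hypothetical email tool; a real one would call an email API."""
    return f"queued email to {to}: {body}"

TOOLS: Dict[str, Callable[..., str]] = {"summarize_csv": summarize_csv, "send_email": send_email}

def run_agent(next_action: Callable[[List[dict]], dict], goal: str, max_steps: int = 5) -> List[dict]:
    """The model picks an action, we run the tool, and the observation is fed back.

    `next_action` stands in for an LLM call returning {"tool": ..., "args": {...}}
    or {"final": "..."}.
    """
    history: List[dict] = [{"role": "user", "content": goal}]
    for _ in range(max_steps):  # hard step cap guards against infinite loops
        action = next_action(history)
        if "final" in action:
            history.append({"role": "assistant", "content": action["final"]})
            return history
        tool = TOOLS.get(action["tool"])
        observation = tool(**action["args"]) if tool else f"unknown tool {action['tool']!r}"
        history.append({"role": "tool", "name": action["tool"], "content": observation})
    raise RuntimeError("step budget exhausted before reaching a final answer")

CSV = "month,revenue\nJan,100\nFeb,150\n"

def scripted_planner(history: List[dict]) -> dict:
    """Deterministic stand-in for the LLM, keyed on how many tool results exist."""
    observations = [m for m in history if m["role"] == "tool"]
    if not observations:
        return {"tool": "summarize_csv", "args": {"csv_text": CSV}}
    if len(observations) == 1:
        return {"tool": "send_email", "args": {"to": "ceo@example.com", "body": observations[0]["content"]}}
    return {"final": "Summary emailed to the CEO."}

for message in run_agent(scripted_planner, "Analyze this CSV and email the summary to the CEO"):
    print(message)
```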

Core Agentic Capabilities:

- Planning: Breaking complex tasks into subtasks

- Tool Use: Calling APIs, databases, search engines, code executors

- Memory: Maintaining conversation and execution history

- Reflection: Self-critique and error correction

- Collaboration: Multi-agent systems with specialized roles

Top Frameworks:

1. LangGraph

- Graph-based state machines for agent workflows

- Explicit control flow with cycles and conditionals

- Built-in persistence and human-in-the-loop

- Best for: Complex, deterministic workflows

2. AutoGPT / BabyAGI

- Goal-driven autonomous task execution

- Self-generating task lists

- Best for: Research and exploration tasks

3. CrewAI

- Multi-agent collaboration with role assignment

- Sequential and parallel task execution

- Best for: Team-like workflows (researcher + writer + critic)

4. Microsoft Semantic Kernel

- Enterprise-grade plugin architecture

- Native Azure integration

- Best for: Corporate environments with strict governance

5. LlamaIndex Agents

- Data-focused agent framework

- Query planning over structured/unstructured data

- Best for: Analytics and business intelligence

6. Haystack agents and cloud-native orchestrators (Azure, AWS, GCP stacks): combine LLM calls with classic microservices and event-driven infrastructure.

Real-World Agent Pattern:

User Request → Planner Agent → [Research Agent, Data Agent, Code Agent] → Synthesizer Agent → Quality Checker → Final Output

Challenges: Infinite loops (agents getting stuck), high latency, and compounding error rates (one wrong step ruins the workflow).

- Execution unpredictability and infinite loops

- Cost explosion (agents can make 10–50+ LLM calls per task)

- Debugging complexity across multi-step workflows

- Latency (2–30 seconds for complex tasks)

- Security risks from tool access and code execution

- Reliability at ~60–75% for complex multi-step tasks
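Loops and cost explosion are usually handled with hard budgets enforced outside the model. The class below is a hypothetical guard, not part of any framework listed above. It caps LLM calls and estimated spend, and stops a run when the agent repeats an identical tool call. Token prices are parameters, not real provider rates.

```python
import json
from dataclasses import dataclass, field
from typing import Set

@dataclass
class AgentBudget:
    """Hypothetical guard that caps LLM calls, estimated spend, and repeated actions."""
    max_calls: int = 20
    max_cost_usd: float = 1.00
    calls: int = 0
    cost_usd: float = 0.0
    seen_actions: Set[str] = field(default_factory=set)

    def charge(self, input_tokens: int, output_tokens: int,
               usd_per_1k_in: float, usd_per_1k_out: float) -> None:
        """Record one LLM call and raise if either budget is exceeded."""
        self.calls += 1
        self.cost_usd += input_tokens / 1000 * usd_per_1k_in + output_tokens / 1000 * usd_per_1k_out
        if self.calls > self.max_calls:
            raise RuntimeError(f"call budget exceeded ({self.calls} > {self.max_calls})")
        if self.cost_usd > self.max_cost_usd:
            raise RuntimeError(f"cost budget exceeded ({self.cost_usd:.2f} USD)")

    def check_action(self, tool: str, args: dict) -> None:
        """Stop the run if the agent repeats an identical tool call (a common loop symptom)."""
        key = tool + json.dumps(args, sort_keys=True)
        if key in self.seen_actions:
            raise RuntimeError(f"repeated action detected: {tool}")
        self.seen_actions.add(key)

budget = AgentBudget(max_calls=3, max_cost_usd=0.05)
budget.charge(2000, 500, usd_per_1k_in=0.005, usd_per_1k_out=0.015)
budget.check_action("search", {"q": "Q3 revenue"})
try:
    budget.check_action("search", {"q": "Q3 revenue"})
except RuntimeError as err:
    print(err)  # repeated action detected: search
```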

Cost vs. Quality: $3,000–20,000/month depending on usage. Quality reaches 85–92% for tasks within agent capabilities but can fail catastrophically on edge cases. Critical for research automation, complex data analysis, and workflow orchestration.

- Cost: $$$$ (High token usage due to reasoning loops)

- Quality: Very High (Capable of complex problem solving, not just Q&A).

Level 3 is where you move from “chat with my data” to “AI that can do work,” but it demands serious investment in testing, governance, and LLMOps.

Level 4: Fine-Tuned Models – Domain Specialization

Specialization via Weights

When prompt engineering isn’t enough to change the behavior or format of a model, we turn to fine-tuning. Fine-tuning adapts a pre-trained model to specific domains or tasks by training it further on curated datasets, updating the model’s actual neural weights so that the new knowledge and behavior are embedded directly in them.

Core idea:

- Start with a general base model (open or proprietary) and train it further on curated, labeled or preference data:

- Domain QA pairs, support tickets, legal opinions, medical notes (subject to compliance).

- Task-specific data: code, SQL, extraction labels, classification tags.
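Before training, such data is usually converted to JSON Lines, one example per line. This sketch assumes a messages-array chat format similar to what several hosted fine-tuning services accept; check your provider's exact schema. The system prompt and sample QA pairs are hypothetical. The sketch also drops empty and duplicate pairs, a cheap first step of data curation.

```python
import json
from typing import Dict, Iterable, List, Tuple

SYSTEM_PROMPT = "You are a support assistant for ACME routers. Answer concisely."  # hypothetical

def to_chat_record(question: str, answer: str) -> Dict[str, List[Dict[str, str]]]:
    """Wrap one QA pair as a system/user/assistant conversation."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}

def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize QA pairs as JSON Lines, skipping empty and exact-duplicate pairs."""
    seen = set()
    lines = []
    for question, answer in pairs:
        q, a = question.strip(), answer.strip()
        if not q or not a or (q, a) in seen:
            continue
        seen.add((q, a))
        lines.append(json.dumps(to_chat_record(q, a), ensure_ascii=False))
    return "".join(line + "\n" for line in lines)

pairs = [
    ("How do I reset the router?", "Hold the reset button for 10 seconds."),
    ("How do I reset the router? ", "Hold the reset button for 10 seconds."),  # duplicate after strip
    ("", "Orphaned answer with no question."),
]
jsonl = to_jsonl(pairs)
print(jsonl.count("\n"))  # 1 record survives
```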

Fine-Tuning Approaches:

1. Full Fine-Tuning

- Updates all model parameters

- Requires significant compute (GPU clusters)

- Best for: Complete domain adaptation

- Cost: $5,000–50,000 per training run

2. Parameter-Efficient Fine-Tuning (PEFT): Instead of retraining the whole model, we freeze the base weights and train a small set of additional parameters, such as low-rank adapters. It’s cheap and fast.

3. LoRA (Low-Rank Adaptation)

- Trains small adapter layers (0.1–1% of parameters)

- Reduces compute by 90%+

- Maintains base model performance

- Cost: $500–5,000 per training run

4. QLoRA (Quantized LoRA)

- Combines LoRA with 4-bit quantization

- Enables fine-tuning on consumer GPUs

- Minimal quality degradation

5. Instruction Tuning

- Focuses on task-following capabilities

- Uses structured instruction-response pairs

- Ideal for consistent output formats

6. Post-training (RLHF/RLAIF, DPO, etc.): Reinforcement Learning from Human Feedback (RLHF) and related methods use human (or AI) preference data to align the model’s behavior (e.g., making it safer or more concise).

- Aligns model behavior with human preferences

- Requires extensive human annotation

- Used for safety and quality improvements

7. SFT (Supervised Fine-Tuning): Training on a dataset of “Golden Answers” to teach the model a specific medical or legal dialect.
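The arithmetic behind LoRA is compact enough to show directly. With a frozen weight matrix W of shape d_out x d_in, LoRA learns B (d_out x r) and A (r x d_in) and computes h = x W^T + (alpha / r) * x A^T B^T. The pure-Python sketch below (no ML framework assumed) computes the trainable-parameter fraction and the adapted forward pass. QLoRA would additionally store W in 4-bit precision, which is not modeled here.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]

def lora_trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """LoRA trains B (d_out x r) and A (r x d_in) instead of the full W (d_out x d_in)."""
    return rank * (d_out + d_in) / (d_out * d_in)

def lora_forward(x: Matrix, W: Matrix, A: Matrix, B: Matrix, alpha: float, rank: int) -> Matrix:
    """h = x W^T + (alpha / r) * x A^T B^T, with W frozen and only A, B trained."""
    base = matmul(x, transpose(W))
    delta = matmul(matmul(x, transpose(A)), transpose(B))
    scale = alpha / rank
    return [[b + scale * d for b, d in zip(rb, rd)] for rb, rd in zip(base, delta)]

# A 4096 x 4096 projection with rank 8: well under 1% of the layer's weights are trainable.
print(f"{lora_trainable_fraction(4096, 4096, 8):.2%}")  # 0.39%

W = [[1.0, 2.0], [3.0, 4.0]]   # frozen base weights (d_out=2, d_in=2)
A = [[0.5, -0.5]]              # rank-1 down-projection
B = [[0.0], [0.0]]             # up-projection, zero-initialized
print(lora_forward([[1.0, 1.0]], W, A, B, alpha=1.0, rank=1))  # [[3.0, 7.0]], same as base
```

Because B starts at zero, which is the initialization used in the LoRA paper, the adapted layer initially behaves exactly like the base layer. Training then moves only A and B.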

When to Fine-Tune:

- Specialized terminology or jargon (medical, legal, technical)

- Consistent formatting requirements

- Latency-critical applications (a smaller fine-tuned model can be faster than a prompted large model)

- Proprietary task patterns not in base training data

Challenges: Data preparation. You need thousands of high-quality, cleaned examples. Fine-tuning can also cause “Catastrophic Forgetting” (the model learns your data but loses some of its general capabilities).

- Data collection and curation (requires 1,000–100,000+ examples)

- Catastrophic forgetting (model loses general capabilities)

- Overfitting on small datasets

- Training infrastructure and expertise requirements

- Model versioning and deployment complexity

- Evaluation framework design

- Retraining costs when knowledge needs updating

Cost vs. Quality: Setup $10,000–100,000, ongoing $2,000–15,000/month. Quality reaches 90–96% for narrow domains. Essential for specialized industries, consistent behavior requirements, and applications requiring smaller model footprints.

- Cost: $$$$ (Compute for training + Hosting custom models)

- Quality: High Domain Specificity (Perfect for specific output formats or niche languages).

Level 5: Fully Trained Model (Pre-training) – The Ultimate Customization

The Sovereign Approach

The highest level of difficulty. This involves training a model from scratch (like BloombergGPT) on raw data. Building foundation models from the ground up offers maximum control but demands massive resources. This is the domain of AI research labs and large enterprises.

Core idea:

- Own the full or near-full stack of pretraining + post-training for a specific domain: legal, medical, finance, scientific, code, or even a particular language/locale.

- You might still add RAG and agents on top, but the base model itself is deeply specialized.

Who is this for? Sovereign nations, massive enterprises with unique data (biotech/genomics), or companies that need total IP ownership and privacy guarantees.

The Process: Curating terabytes of data, managing clusters of thousands of H100 GPUs, and months of training time.
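Much of the curation effort goes into cleaning and deduplication. The sketch below shows only the simplest form: exact-match dedup after normalizing Unicode, case, and whitespace, using SHA-256 digests. Large-scale pipelines typically add near-duplicate detection (for example MinHash), which is not shown. The sample corpus is hypothetical.

```python
import hashlib
import re
import unicodedata
from typing import Iterable, List

def normalize(doc: str) -> str:
    """Canonicalize before hashing so trivial variants collapse to one key."""
    doc = unicodedata.normalize("NFKC", doc).lower()
    return re.sub(r"\s+", " ", doc).strip()

def dedupe_exact(docs: Iterable[str]) -> List[str]:
    """Keep the first occurrence of each normalized document (exact-match dedup)."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept

corpus = ["Rates rose 0.25%.", "rates  rose 0.25%.\n", "Bond yields fell."]
print(dedupe_exact(corpus))  # ['Rates rose 0.25%.', 'Bond yields fell.']
```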

The Training Pipeline:

1. Data Collection and Curation

- Gathering massive volumes of diverse raw text (web-scale crawls can reach petabytes before filtering)

- Cleaning, deduplicating, filtering toxic content

- Creating balanced datasets across domains

- Typical corpus: 1–10 trillion tokens

2. Architecture Design

- Transformer configurations (layers, attention heads, dimensions)

- Tokenization strategy

- Context window engineering

- Efficiency optimizations (FlashAttention, Mixture-of-Experts)

3. Pretraining

- Self-supervised learning on massive compute clusters

- Training for weeks to months on thousands of GPUs

- Intermediate checkpointing and evaluation

- Scaling law optimization

4. Post-Training

- Instruction tuning for task-following

- RLHF for alignment

- Safety filtering and red teaming

- Distillation for efficiency
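A back-of-the-envelope estimate shows why pretraining takes "weeks to months on thousands of GPUs". The sketch uses two widely cited heuristics: about 6 FLOPs per parameter per training token, and roughly 20 tokens per parameter from the Chinchilla scaling-law work. The peak throughput (about 989 TFLOPS, an H100-class dense BF16 figure) and 40% utilization are assumptions; replace them with your own measurements.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token (forward + backward)."""
    return 6.0 * params * tokens

def gpu_hours(flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """Convert total FLOPs to GPU-hours given per-GPU peak throughput and achieved utilization."""
    return flops / (peak_flops_per_gpu * utilization) / 3600.0

params = 70e9                # 70B-parameter model (illustrative)
tokens = 20 * params         # ~20 tokens per parameter (Chinchilla heuristic) = 1.4T tokens
flops = training_flops(params, tokens)
# Assumed: ~989e12 FLOP/s dense BF16 peak per GPU, 40% model FLOPs utilization.
hours = gpu_hours(flops, peak_flops_per_gpu=989e12, utilization=0.40)
print(f"{flops:.2e} FLOPs, ~{hours:,.0f} GPU-hours, ~{hours / 1024 / 24:.1f} days on 1,024 GPUs")
```

For a 70B-parameter model on 1.4 trillion tokens, this gives about 5.9e23 FLOPs and roughly 413,000 GPU-hours. That is about 17 days on 1,024 GPUs at the assumed utilization, before any restarts, ablations, or post-training.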

When Training Makes Sense:

- Unique data moats (proprietary industry data)

- Specific architectural requirements (edge deployment, novel modalities)

- Strategic model ownership and independence

- Research and competitive differentiation

Challenges: Astronomical costs, deep ML expertise required, and massive infrastructure overhead.

- Compute costs: $2–100 million per training run

- Infrastructure complexity (distributed training, networking, storage)

- Talent acquisition (ML researchers, infrastructure engineers)

- Training stability (convergence issues, loss spikes)

- Evaluation and benchmarking at scale

- Ongoing maintenance and updates

- Time to market (6–18 months from start to production)

Cost vs. Quality: Initial investment $5–100+ million, ongoing $500K–5M+/month. Quality can reach 95–99% for intended use cases with proper execution. Reserved for organizations with exceptional resources and strategic imperatives requiring full model ownership.

- Cost: $$$$$ (Millions of dollars)

- Quality: Highest possible control (The model is your data).

Choosing Your Approach: A Decision Framework

Start with Level 1 if you’re:

- Prototyping or validating product-market fit

- Working with limited budgets (<$5K/month)

- Solving general-purpose problems

Move to Level 2 (RAG) when you need:

- Up-to-date or proprietary information access

- Reduced hallucinations

- Explainable information sources

Adopt Level 3 (Agents) for:

- Complex, multi-step workflows

- Dynamic decision-making requirements

- Task automation beyond simple Q&A

Invest in Level 4 (Fine-Tuning) if you require:

- Consistent specialized behavior

- Lower latency with smaller models

- Domain-specific terminology and patterns

Consider Level 5 (Training) only with:

- $50M+ budget and strategic necessity

- Unique data advantages

- Long-term model ownership goals
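The framework above can be encoded as a small rule function, which makes the escalation logic explicit and testable. This is a hypothetical sketch with illustrative field names. It follows the article's summary rule: Levels 4 and 5 are considered only once prompting and RAG have demonstrably fallen short.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Requirements:
    needs_private_or_fresh_data: bool = False
    needs_multi_step_actions: bool = False
    needs_specialized_behavior: bool = False   # strict formats, jargon, or low latency with a small model
    rag_and_prompting_failed: bool = False     # measured on an eval set, not assumed
    budget_usd: float = 0.0
    has_unique_data_moat: bool = False
    wants_model_ownership: bool = False

def recommend_levels(req: Requirements) -> List[int]:
    """Return the levels to combine, escalating only when a requirement demands it."""
    levels = [1]  # always start with prompt & context engineering
    if req.needs_private_or_fresh_data:
        levels.append(2)
    if req.needs_multi_step_actions:
        levels.append(3)
    if req.needs_specialized_behavior and req.rag_and_prompting_failed:
        levels.append(4)
    if (req.budget_usd >= 50_000_000 and req.has_unique_data_moat
            and req.wants_model_ownership and req.rag_and_prompting_failed):
        levels.append(5)
    return levels

print(recommend_levels(Requirements(needs_private_or_fresh_data=True, needs_multi_step_actions=True)))
# [1, 2, 3]
```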

The Hybrid Reality

Most production systems combine multiple levels. A typical enterprise deployment might use:

- Level 1 for rapid prototyping and simple tasks

- Level 2 for knowledge retrieval and grounding

- Level 3 for workflow orchestration

- Level 4 for specialized, high-volume components

The key is matching complexity to requirements while maintaining manageable costs and operational overhead.

Conclusion

The journey from prompts to production-grade AI is not linear — it’s a strategic choice based on your resources, requirements, and risk tolerance. Start simple, measure rigorously, and scale complexity only when simpler approaches demonstrably fail.

Success in production AI comes not from using the most sophisticated approach, but from deploying the simplest system that reliably solves your problem. As the field evolves, the lines between these levels continue to blur, with platforms offering hybrid solutions that combine the best of multiple approaches.

The future of LLM deployment lies not in choosing a single level, but in orchestrating the right combination for your unique challenges.

Summary: Which Level do you need?

Start at Level 1. Move to Level 2 for data. Move to Level 3 for automation. Only touch Level 4 or 5 if you have a problem that RAG cannot solve.


- Visit my blogs

- Follow me on Medium and subscribe for free to catch my latest posts.

- Let’s connect on LinkedIn / Ajay Verma
