The Evolving Symphony of Operations: From DevOps to AgentOps

The world of software development and artificial intelligence is a dynamic landscape, constantly pushing the boundaries of what’s possible. As our creations become more complex and intelligent, so too does the need for sophisticated operational frameworks to manage their lifecycle. What began as a quest for speed and reliability in traditional software has blossomed into a nuanced ecosystem of “Ops” disciplines, each designed to tackle the unique challenges of modern AI. Let’s embark on a journey through the evolution of operations, exploring the “whys” and “whats” of each crucial step.

The Dawn of Agility: DevOps

The progenitor of all modern Ops, DevOps emerged from the frustration of siloed development and operations teams. Developers, focused on creating features, often clashed with operations, responsible for stability and deployment. The goal of DevOps was simple yet revolutionary: to break down these barriers, fostering collaboration, automation, and continuous delivery.

What it solved:

- Slow release cycles.

- Manual, error-prone deployments.

- Blame games between teams.

- Lack of feedback loops between development and production.

Limitations: While a massive leap forward for traditional software, DevOps wasn’t inherently equipped for the unique demands of machine learning models. The lifecycle of an ML model involves data pipelines, model training, hyperparameter tuning, and continuous re-training — elements largely absent in conventional software.

Intelligent Operations: AIOps (Artificial Intelligence for IT Operations)

As IT environments grew more complex with microservices, cloud deployments, and massive data streams, human operators became overwhelmed by the volume of signals they had to watch. This is where AIOps stepped in. AIOps applies AI and machine learning techniques to automate and enhance IT operations, providing intelligent insights, anomaly detection, and automated responses across the entire IT infrastructure. It’s not a standalone “Ops” discipline but rather an intelligent layer that can augment DevOps, MLOps, and others.

What it solved:

- Information overload from monitoring tools.

- Slow incident detection and resolution.

- Reactive rather than proactive problem-solving.

- Identifying root causes in complex, distributed systems.

- Predicting potential outages before they occur.
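To make the anomaly-detection idea concrete, here is a minimal sketch that flags metric values far from a rolling average. The function name, window size, threshold, and sample latencies are all assumptions chosen for illustration; real AIOps platforms use considerably richer models.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of points that deviate strongly from the recent window."""
    recent = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Guard against a flat window, where the standard deviation is zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Illustrative latency samples in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 450, 101, 99, 100]
print(detect_anomalies(latencies_ms))  # prints [6]
```

One design caveat: the spike itself enters the window afterwards and inflates the standard deviation, so a follow-up anomaly right after it could be missed. Treat this as a starting point rather than a production detector.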

Limitations: While powerful for infrastructure and application monitoring, AIOps primarily focuses on the IT environment’s operational health. It doesn’t inherently address the unique lifecycle management of AI models themselves (data versioning, model retraining, ethical AI considerations, etc.), which are the domain of MLOps and subsequent disciplines.

Taming the Model Menagerie: MLOps

As machine learning models moved from research labs to critical production systems, the need for a specialized operational framework became undeniable. MLOps picked up where DevOps left off, extending its principles to the entire machine learning lifecycle. It focuses on automating and streamlining the process of building, deploying, and managing ML models. AIOps can certainly enhance MLOps by providing intelligent monitoring of the ML infrastructure and data pipelines, but MLOps retains responsibility for the model lifecycle itself.

What it solved:

- Manual and inconsistent model deployments.

- Lack of version control for data and models.

- Difficulties in reproducing model results.

- Challenges in monitoring model performance drift in production.

- Siloed data scientists and ML engineers.
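Drift monitoring is easier to picture with a small example. The sketch below computes a Population Stability Index (PSI) between a training sample and a production sample of one numeric feature. The function name, the bin count, and the 0.25 alert threshold (an often-quoted rule of thumb) are assumptions here, not a prescribed MLOps standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the feature is constant

    def bucket_shares(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp so values outside the training range land in the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = list(range(100))
production = [x + 50 for x in training]  # simulated shift in the feature

psi = population_stability_index(training, production)
if psi > 0.25:  # assumed alert threshold
    print(f"Drift suspected (PSI={psi:.2f}); consider retraining")
```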

Limitations: MLOps, while powerful for traditional ML models, still operates on the assumption of fixed model architectures and predictable data pipelines. The rise of large language models (LLMs) and generative AI brought new complexities that MLOps, in its initial form, couldn’t fully address.

Orchestrating the Giants: LLMOps

The explosion of Large Language Models (LLMs) like GPT-3 and beyond introduced a new paradigm. These models are not just “trained” in the traditional sense; they are often pre-trained on vast datasets, fine-tuned, and then prompted with intricate instructions. LLMOps focuses on the specialized challenges of deploying, managing, and continuously improving these massive, versatile models.

What it solved:

- Managing colossal model sizes and infrastructure requirements.

- Prompt engineering and versioning.

- Evaluating LLM outputs for quality, bias, and safety.

- Efficient fine-tuning and adaptation to specific tasks.

- Monitoring latency and cost of inference.
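Prompt versioning, one of the items above, can be sketched with nothing more than a content hash. The `PromptRegistry` class below is hypothetical and in-memory only; a real setup would more likely sit on a database or a Git repository.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store mapping a prompt name to its version history."""
    _versions: dict = field(default_factory=dict)

    def register(self, name: str, template: str) -> str:
        # Content hash: identical text always yields the same version id.
        version = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        history = self._versions.setdefault(name, [])
        if not any(v == version for v, _ in history):
            history.append((version, template))
        return version

    def get(self, name: str, version: Optional[str] = None) -> str:
        history = self._versions[name]
        if version is None:
            return history[-1][1]  # most recently added template
        for v, template in history:
            if v == version:
                return template
        raise KeyError(f"{name}@{version} not found")


registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize: {text}")
v2 = registry.register("summarize", "Summarize in one sentence: {text}")
print(registry.get("summarize", v1))  # an older version stays retrievable
```

Pinning a version id alongside each logged response makes it possible to trace a bad output back to the exact prompt text that produced it.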

Limitations: LLMOps is excellent for managing and deploying existing LLMs. However, it doesn’t fully encompass the broader challenges of building and managing generative AI solutions that might combine multiple models, custom architectures, or novel forms of data generation.

Beyond Prediction: GenOps (Generative AI Operations)

Generative AI isn’t just about understanding existing data; it’s about creating new data — images, text, code, audio, and more. GenOps represents the operational framework for the entire generative AI ecosystem. It’s about managing the lifecycle of generative models, from initial concept and data curation to model training, output evaluation, and ethical deployment.

What it solved:

- Managing diverse generative model types (GANs, VAEs, Diffusion Models, etc.).

- Evaluating the quality, creativity, and fidelity of generated content.

- Addressing ethical concerns like deepfakes, bias amplification, and intellectual property.

- Orchestrating complex multi-modal generative pipelines.

- Facilitating human-in-the-loop feedback for refinement of generated content.

Limitations: While GenOps tackles the lifecycle of generative models, it primarily focuses on the “what” and “how” of generating. It does not go deep on the computational efficiency and resource optimization that become critical at scale.

The Efficiency Imperative: FLOPs (Floating Point Operations)

This isn’t an “Ops” discipline in the same sequential sense, but rather a crucial metric and a related area of operational focus. FLOPs (floating point operations) is a count of the arithmetic operations a model performs. It should not be confused with FLOPS, which measures how many such operations hardware can execute per second. As models become larger and more complex, especially generative ones, the computational cost becomes a significant factor for deployment, energy consumption, and environmental impact.
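As a rough worked example, the forward-pass operation count of a fully connected layer can be computed by hand. The sketch below counts multiplications and additions separately; the function names and the 784-256-10 network are assumptions for illustration, and activation functions are ignored.

```python
def dense_layer_flops(in_features, out_features, bias=True):
    """Forward-pass operation count for one input vector through a dense layer."""
    multiplies = in_features * out_features
    # Summing in_features products takes in_features - 1 additions per output.
    additions = (in_features - 1) * out_features
    if bias:
        additions += out_features  # one extra addition per output for the bias
    return multiplies + additions


def mlp_flops(layer_sizes):
    """Total count for a stack of dense layers, e.g. [784, 256, 10]."""
    return sum(
        dense_layer_flops(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


print(mlp_flops([784, 256, 10]))  # prints 406528
```

With a bias term the count works out to 2 x inputs x outputs per layer, which is where the common "two operations per multiply-accumulate" shorthand comes from.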

Why it became critical:

- Edge Devices: Deploying large models on resource-constrained devices (phones, IoT) requires highly efficient architectures.

- Sustainability: Reducing the carbon footprint of AI training and inference.

- Cost Optimization: Lowering cloud computing expenses for model serving.

- Real-time Applications: Ensuring models can run with low latency.

The focus on FLOPs often leads to the development and operationalization of techniques like model pruning, quantization, and efficient architecture search, which can collectively be considered an operational concern within the broader GenOps framework.
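Of the techniques just mentioned, quantization is the simplest to sketch. The example below applies symmetric per-tensor int8 quantization to a plain list of weights. The function names and sample weights are assumptions, and production toolchains typically add calibration data and per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"max error {worst_error:.4f}")
```

Each weight now fits in one byte instead of four, at the price of a rounding error of at most half the scale factor per weight.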

The Future of Autonomy: AgentOps

The ultimate evolution of “Ops” is driven by the rise of AI agents — autonomous entities capable of understanding goals, planning actions, and interacting with their environment. AgentOps is the framework for designing, deploying, monitoring, and continuously improving these intelligent agents. It’s about managing not just a single model, but a system that can reason, learn, and adapt.

Why it’s the next frontier:

- Complex Tasks: Agents can break down complex problems into sub-tasks and execute them.

- Dynamic Environments: Agents need to operate reliably in unpredictable real-world scenarios.

- Continuous Learning: Agents must adapt and improve their behavior over time through interaction.

- Safety and Control: Ensuring agents operate within ethical boundaries and can be controlled or halted.

- Interoperability: Managing interactions between multiple agents and external systems.

- Human-Agent Collaboration: Developing frameworks for seamless human oversight and intervention.

Imagine an AI agent designed to manage a smart factory, optimizing production lines, predicting maintenance needs, and even interacting with human workers. This requires not just robust models, but an entire operational system to ensure the agent’s continuous effectiveness, safety, and alignment with human goals. AgentOps will involve sophisticated monitoring of agent behavior, continuous evaluation of their decision-making processes, and frameworks for safely updating and refining their underlying intelligence.
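To ground the safety-and-control point, here is a minimal sketch of a supervisor that runs an agent's planned actions under a tool allowlist, a step budget, and a kill switch. Everything in it (the `AgentRun` class, the tool names, the factory plan) is hypothetical and stands in for whatever an actual agent framework provides.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRun:
    """Hypothetical supervisor that executes an agent's planned actions under limits."""
    allowed_tools: set
    max_steps: int = 5
    halted: bool = False
    log: list = field(default_factory=list)

    def halt(self):
        # Operator-facing kill switch: no further actions run after this.
        self.halted = True

    def execute(self, plan, tools):
        for step, (tool_name, argument) in enumerate(plan):
            if self.halted:
                self.log.append((step, tool_name, "skipped: halted"))
                break
            if step >= self.max_steps:
                self.log.append((step, tool_name, "skipped: step budget exhausted"))
                break
            if tool_name not in self.allowed_tools:
                self.log.append((step, tool_name, "blocked: tool not allowed"))
                continue
            self.log.append((step, tool_name, tools[tool_name](argument)))
        return self.log


# Stand-in tools for the smart factory scenario.
tools = {
    "read_sensor": lambda line: f"{line}: 71C",
    "stop_line": lambda line: f"{line} stopped",
}
run = AgentRun(allowed_tools={"read_sensor"})
plan = [("read_sensor", "line-1"), ("stop_line", "line-1")]
for entry in run.execute(plan, tools):
    print(entry)
```

The agent may read sensors freely, but stopping a production line is blocked and logged, leaving that decision to a human. The log doubles as the behavioral trace that monitoring would consume.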

The journey of Ops reflects the increasing sophistication of our technological ambitions. From ensuring software stability to orchestrating autonomous intelligence, each new “Ops” discipline builds upon its predecessors, addressing emerging complexities and paving the way for the next wave of innovation. The symphony continues, ever evolving towards more intelligent, efficient, and autonomous systems.

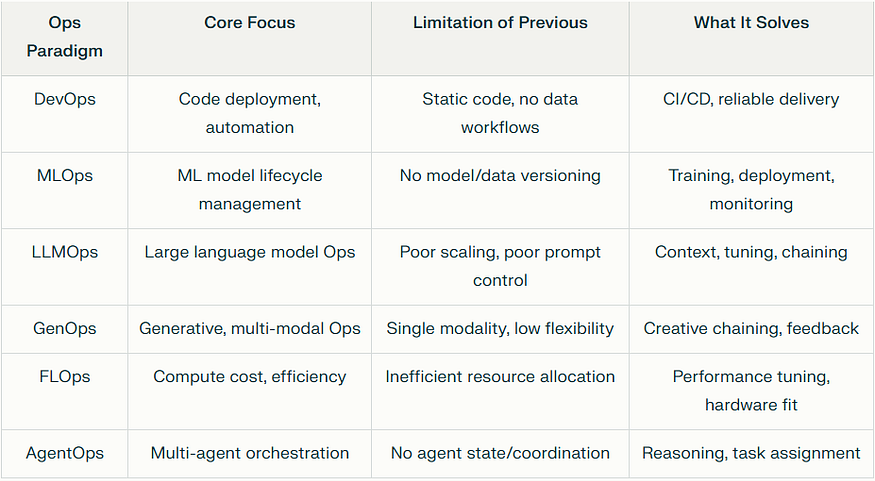

Summary Table: Evolution of Ops Disciplines

Why Each Next ‘Ops’ Was Needed

The DevOps Revolution

DevOps began as a cultural and operational shift bridging the gap between software development and IT operations. Its goal was to enable continuous integration, delivery, and rapid deployment cycles, eliminating bottlenecks between teams that traditionally worked in silos. DevOps unlocked faster app delivery, greater reliability, and tighter feedback loops. However, DevOps was fundamentally designed around static code bases and deterministic outputs, struggling to address the complexity and iterative nature of machine learning systems.

Rise of MLOps: Operations for Machine Learning

With the surge of ML use cases, teams quickly realized that deploying and managing machine learning workflows required new tools and practices. MLOps extends DevOps principles to the data-centric nature of ML, handling model training, versioning, monitoring, and reproducibility. It automates retraining, ensures seamless deployment, and tracks data/model drift. But as ML matured to deep learning and generative AI, MLOps encountered its limitations — primarily around resource management, compute scaling, and validation pipelines for increasingly massive models.

LLMOps: Scaling for Large Language Models

Large Language Models (LLMs) like GPT-4, built on transformer architectures, require orchestration far beyond regular ML models. LLMOps emerged to answer this need, focusing on efficient deployment, prompt engineering, context management, and continuous evaluation in multi-tenant environments. It enables fine-tuning, cost optimization (e.g., API call management), and safeguarding data privacy. LLMOps marks a shift from managing just datasets and models to handling context, agent states, and model chaining. But even these systems have begun showing strain with multi-agent workflows, higher compute needs, and the complexity of generative intelligence.
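Cost optimization in a multi-tenant setting usually starts with knowing who spent what. The ledger below is a minimal sketch; the `UsageTracker` class, the per-1,000-token prices, and the budgets are placeholder assumptions and do not reflect any real provider's pricing.

```python
from collections import defaultdict


class UsageTracker:
    """Hypothetical per-tenant cost ledger for calls to a hosted LLM."""

    def __init__(self, price_per_1k_input, price_per_1k_output):
        self.price_in = price_per_1k_input
        self.price_out = price_per_1k_output
        self.totals = defaultdict(float)

    def record(self, tenant, input_tokens, output_tokens):
        cost = (input_tokens / 1000) * self.price_in \
            + (output_tokens / 1000) * self.price_out
        self.totals[tenant] += cost
        return cost

    def over_budget(self, budgets):
        # Tenants whose accumulated spend exceeds their configured budget.
        return sorted(
            t for t, spent in self.totals.items()
            if spent > budgets.get(t, float("inf"))
        )


tracker = UsageTracker(price_per_1k_input=0.5, price_per_1k_output=1.5)
tracker.record("team-a", input_tokens=2000, output_tokens=1000)
tracker.record("team-b", input_tokens=400, output_tokens=100)
print(tracker.over_budget({"team-a": 2.0, "team-b": 5.0}))  # prints ['team-a']
```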

GenOps: Operations for Generative AI

GenOps is the operational discipline tailored for generative AI — covering not only text models but also image, audio, and multi-modal generative engines. Unlike MLOps or LLMOps, GenOps solves for dynamic prompt workflows, compound model chains, and reuse of outputs (e.g., retrieval-augmented generation). It supports adaptive orchestration of multiple agents, multi-modal pipelines, and advanced evaluation metrics. GenOps workflows require active management of “creativity,” versioning of dynamic assets, and real-time feedback mechanisms. Nonetheless, GenOps often grapples with the underlying challenge of compute cost and complexity as models grow larger.

FLOPs: Measuring Computational Complexity

The FLOPs (Floating Point Operations) paradigm brings focus to computational efficiency. In ML and deep learning, FLOPs measure the actual number of mathematical operations models perform, serving as a proxy for computational cost, energy consumption, and speed, especially when deploying on edge devices or scaling in production. Tracking FLOPs helps teams optimize resource usage, balance accuracy with cost, and pick architectures that suit their infrastructure. However, FLOPs alone don’t solve operational management — they complement the Ops stack by guiding hardware-resource decisions rather than lifecycle management.

AgentOps: Operations for AI Agents

Most recently, AgentOps has emerged to orchestrate multi-agent AI systems — akin to ensembles of intelligent models capable of reasoning, planning, and collaboration. AgentOps deals with state management, coordination, scoring, peer review, and dynamic task assignment among agents. It applies not just to LLMs but also to hybrid systems, integrating discrete knowledge, reasoning, and autonomous behaviors. AgentOps supports rapid composition and evaluation of intelligent workflows in domains like healthcare, insurance, business logistics, and more, bringing intelligent orchestration to the next level.
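Dynamic task assignment among agents can be illustrated with a simple greedy rule: give each task to the least-loaded agent that has the required skill. The function, agent names, and skills below are invented for the example, and real coordinators would also weigh priorities, cost, and past scores.

```python
def assign_tasks(tasks, agents):
    """Greedy assignment: each task goes to the least-loaded agent with the skill."""
    load = {name: 0 for name in agents}
    assignment = {}
    for task, skill in tasks:
        capable = [name for name, skills in agents.items() if skill in skills]
        if not capable:
            assignment[task] = None  # surface unassignable work instead of dropping it
            continue
        # Break ties by name so the result is deterministic.
        chosen = min(capable, key=lambda name: (load[name], name))
        load[chosen] += 1
        assignment[task] = chosen
    return assignment


agents = {
    "claims-agent": {"extract", "summarize"},
    "review-agent": {"summarize", "score"},
}
tasks = [("t1", "summarize"), ("t2", "summarize"), ("t3", "score"), ("t4", "translate")]
print(assign_tasks(tasks, agents))
```

Here `t4` comes back as `None` because no agent can translate, which is the kind of gap an AgentOps dashboard would want to flag.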

Each step in the Ops journey was triggered by a real limitation of the previous discipline:

- DevOps couldn’t handle iterative model workflows and data dependencies.

- MLOps struggled with compute-heavy DL/LLM workloads and context management.

- LLMOps needed more granular control over prompts, conversational context, and response orchestration.

- GenOps was required for creative, multi-modal models demanding novel lifecycle workflows.

- FLOPs tracking became essential as models grew too large for naïve deployment and resource constraints hit production.

- AgentOps now orchestrates the next-generation intelligent agents with dynamic state, coordination, and collaborative behaviors.

Final Thoughts

The Ops journey mirrors our technological evolution — from manual to automated, from automated to intelligent.

Each phase built the foundation for the next — driven by necessity, innovation, and the quest for autonomy.

The future isn’t just “DevOps for AI.”

It’s AgentOps for Humanity — where systems don’t just operate efficiently but think, adapt, and collaborate intelligently.

Conclusion

The journey of Ops paradigms reflects the accelerating demands of modern AI and automation. Each new Ops discipline is born out of necessity, driving innovation and helping teams adapt to ever-evolving technologies. As agentic AI and multi-modal intelligence become the norm, mastering these operational workflows becomes central to success — bringing together scalability, efficiency, and intelligence in every deployment.



- Let’s connect on LinkedIn / Ajay Verma
